Proxmox VE 8 is one of the best free and open-source Type-I hypervisors available for running QEMU/KVM virtual machines (VMs) and LXC containers. It has a nice web management interface and a lot of features.

One of the most useful features of Proxmox VE is that it can passthrough PCI/PCIE devices (e.g. an NVIDIA GPU) from your computer to Proxmox VE virtual machines (VMs). PCI/PCIE passthrough keeps getting better with newer Proxmox VE releases. At the time of this writing, the latest version of Proxmox VE is v8.1, and it has great PCI/PCIE passthrough support.

In this article, I'm going to show you how to configure your Proxmox VE 8 host/server for PCI/PCIE passthrough and configure your NVIDIA GPU for PCIE passthrough on Proxmox VE 8 virtual machines (VMs).

Table of Contents

- Enabling Virtualization from the BIOS/UEFI Firmware of Your Motherboard

- Installing Proxmox VE 8

- Enabling Proxmox VE 8 Community Repositories

- Installing Updates on Proxmox VE 8

- Enabling IOMMU from the BIOS/UEFI Firmware of Your Motherboard

- Enabling IOMMU on Proxmox VE 8

- Verifying if IOMMU is Enabled on Proxmox VE 8

- Loading VFIO Kernel Modules on Proxmox VE 8

- Listing IOMMU Groups on Proxmox VE 8

- Checking if Your NVIDIA GPU Can Be Passthrough to a Proxmox VE 8 Virtual Machine (VM)

- Checking for the Kernel Modules to Blacklist for PCI/PCIE Passthrough on Proxmox VE 8

- Blacklisting Required Kernel Modules for PCI/PCIE Passthrough on Proxmox VE 8

- Configuring Your NVIDIA GPU to Use the VFIO Kernel Module on Proxmox VE 8

- Passthrough the NVIDIA GPU to a Proxmox VE 8 Virtual Machine (VM)

- Still Having Problems with PCI/PCIE Passthrough on Proxmox VE 8 Virtual Machines (VMs)?

- Conclusion

- References

Enabling Virtualization from the BIOS/UEFI Firmware of Your Motherboard

Before you can install Proxmox VE 8 on your computer/server, you must enable the hardware virtualization feature of your processor from the BIOS/UEFI firmware of your motherboard. The process is different for different motherboards. So, if you need any assistance in enabling hardware virtualization on your motherboard, read this article.

Installing Proxmox VE 8

Proxmox VE 8 is free to download, install, and use. Before you get started, make sure to install Proxmox VE 8 on your computer. If you need any assistance on that, read this article.

Enabling Proxmox VE 8 Community Repositories

Once you have Proxmox VE 8 installed on your computer/server, make sure to enable the Proxmox VE 8 community package repositories.

By default, the Proxmox VE 8 enterprise package repositories are enabled, and you won't be able to get/install updates and bug fixes from the enterprise repositories unless you have bought Proxmox VE 8 enterprise licenses. So, if you want to use Proxmox VE 8 for free, make sure to enable the Proxmox VE 8 community package repositories to get the latest updates and bug fixes from Proxmox for free.
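If you prefer the shell to the web interface, the repository switch can be sketched as a small function. This is only a sketch under a few assumptions: the function name enable_pve_community_repo and the file name pve-no-subscription.list are my own choices, and it assumes the stock Proxmox VE 8 layout (based on Debian 12 "bookworm", with the enterprise repository defined in pve-enterprise.list).

```shell
#!/bin/bash
# Sketch: switch APT from the enterprise repository to the community
# (no-subscription) repository. The function name and the
# pve-no-subscription.list file name are illustrative choices.
enable_pve_community_repo() {
    # Directory holding the APT source lists (overridable for a dry run)
    local dir="${1:-/etc/apt/sources.list.d}"

    # Comment out the enterprise repository if its list file exists
    if [ -f "$dir/pve-enterprise.list" ]; then
        sed -i 's/^deb /# deb /' "$dir/pve-enterprise.list"
    fi

    # Add the community repository for Proxmox VE 8 (Debian 12 "bookworm")
    echo "deb http://download.proxmox.com/debian/pve bookworm pve-no-subscription" \
        > "$dir/pve-no-subscription.list"
}
```

Nothing runs until you call enable_pve_community_repo as root. Passing a scratch directory as the first argument lets you inspect the result before touching /etc/apt/sources.list.d.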

Installing Updates on Proxmox VE 8

Once you've enabled the Proxmox VE 8 community package repositories, make sure to install all the available updates on your Proxmox VE 8 server.

Enabling IOMMU from the BIOS/UEFI Firmware of Your Motherboard

The IOMMU configuration is found in different locations on different motherboards. To enable IOMMU on your motherboard, read this article.

Enabling IOMMU on Proxmox VE 8

Once the IOMMU is enabled on the hardware side, you also need to enable IOMMU from the software side (from Proxmox VE 8).

To enable IOMMU from Proxmox VE 8, you have to add the following kernel boot parameters:

| Processor Vendor | Kernel boot parameters to add |
| --- | --- |
| Intel | intel_iommu=on, iommu=pt |
| AMD | iommu=pt |

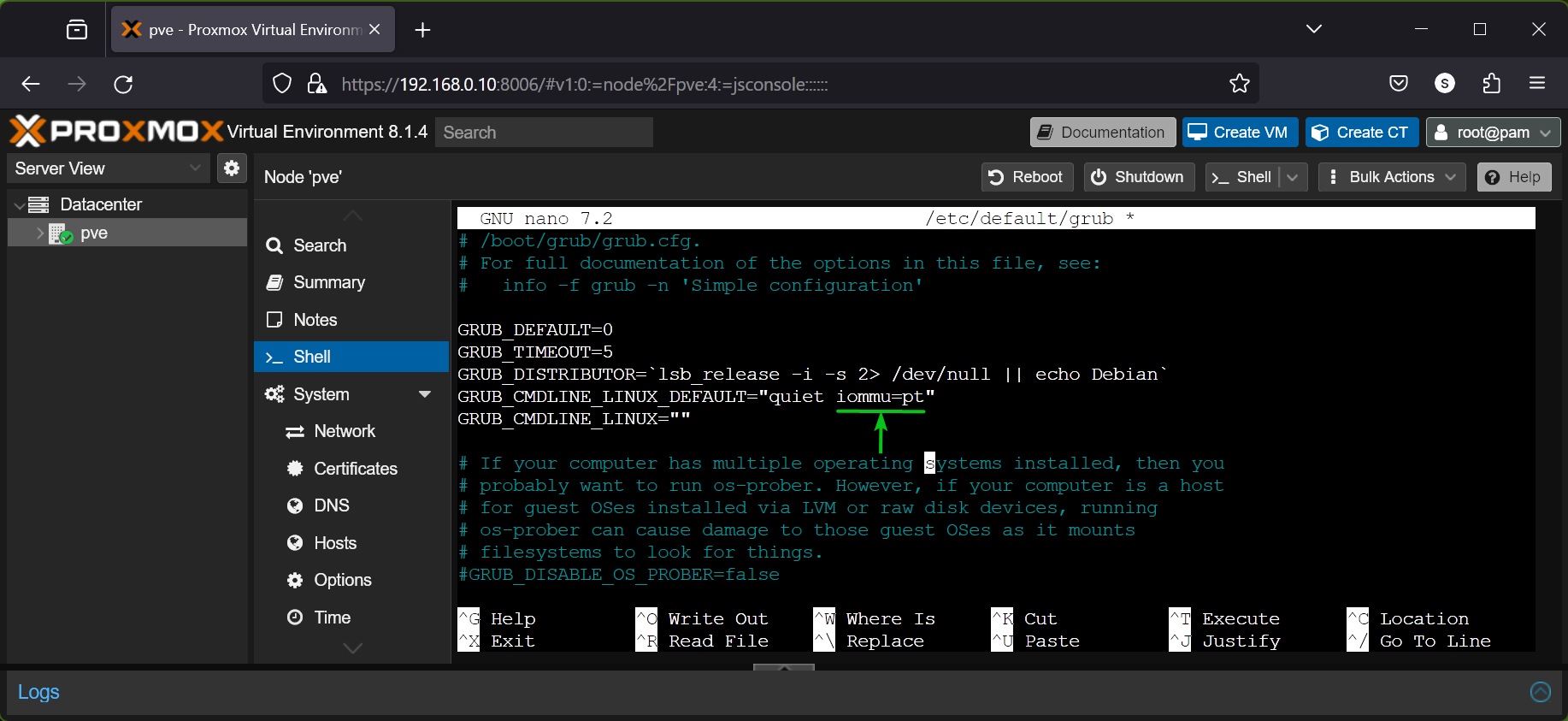

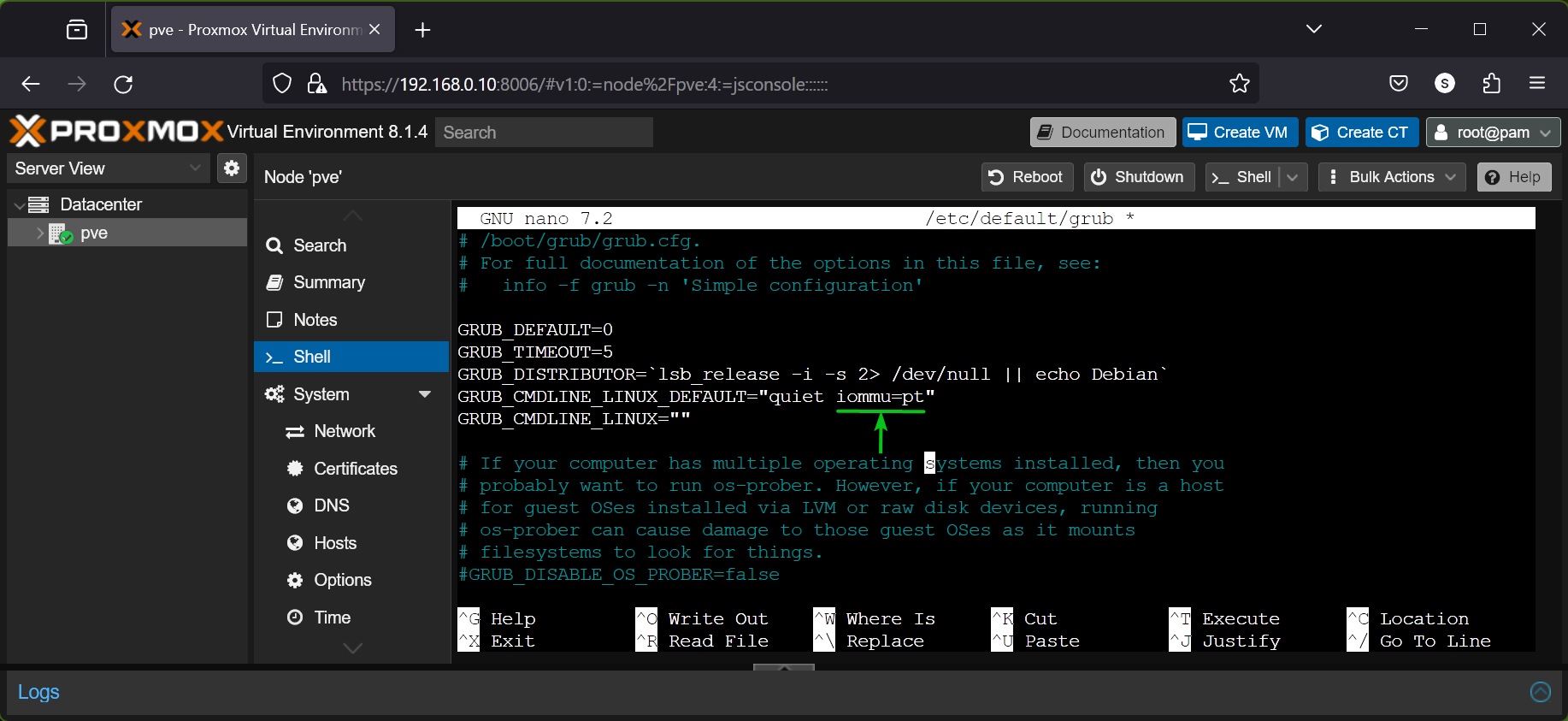

To modify the kernel boot parameters of Proxmox VE 8, open the /etc/default/grub file with the nano text editor as follows:

$ nano /etc/default/grub

At the end of the GRUB_CMDLINE_LINUX_DEFAULT line, add the required kernel boot parameters for enabling IOMMU, depending on the processor you're using.

As I'm using an AMD processor, I've added only the kernel boot parameter iommu=pt at the end of the GRUB_CMDLINE_LINUX_DEFAULT line in the /etc/default/grub file.

Once you're done, press Ctrl + X followed by Y and Enter to save the file.
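If you would rather script the edit than make it by hand in nano, the idea can be sketched as follows. The helper name add_kernel_params is hypothetical, and the sketch assumes GNU sed and a GRUB_CMDLINE_LINUX_DEFAULT line that keeps its value inside double quotes on a single line.

```shell
#!/bin/bash
# Sketch: append kernel boot parameters to GRUB_CMDLINE_LINUX_DEFAULT.
# Usage: add_kernel_params <grub-file> <param>...
# (add_kernel_params is an illustrative name, not a Proxmox tool.)
add_kernel_params() {
    local file="$1"; shift
    local current param
    for param in "$@"; do
        # Read the current value between the double quotes
        current=$(sed -n 's/^GRUB_CMDLINE_LINUX_DEFAULT="\(.*\)"$/\1/p' "$file")
        # Skip parameters that are already on the line
        case " $current " in
            *" $param "*) continue ;;
        esac
        # Append the parameter just before the closing quote
        sed -i "s|^GRUB_CMDLINE_LINUX_DEFAULT=\"\(.*\)\"\$|GRUB_CMDLINE_LINUX_DEFAULT=\"\1 $param\"|" "$file"
    done
}
```

On an AMD system, the call would be add_kernel_params /etc/default/grub iommu=pt; on an Intel system, add_kernel_params /etc/default/grub intel_iommu=on iommu=pt. Running it a second time leaves the file unchanged, so it is safe to repeat.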

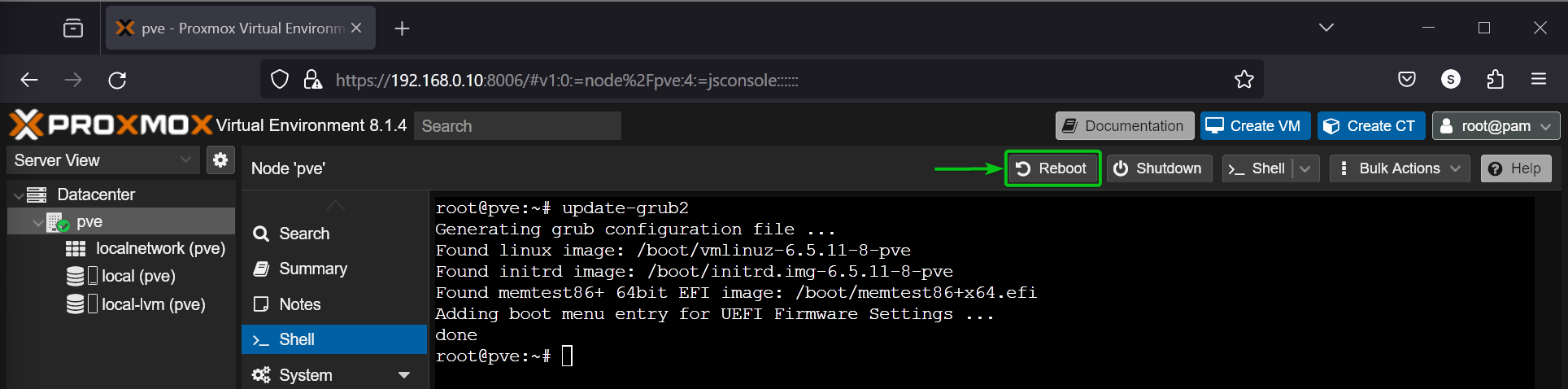

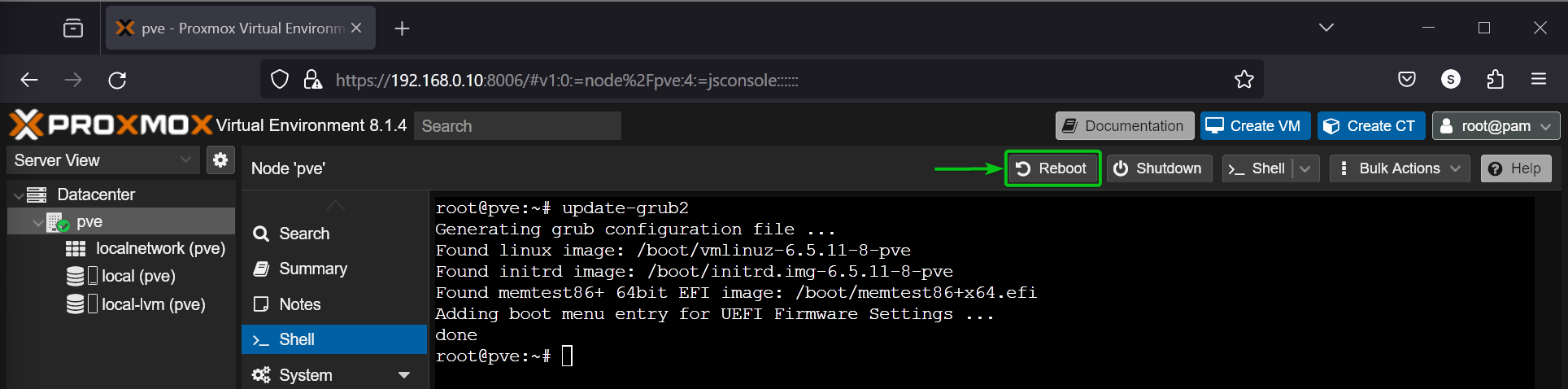

Now, update the GRUB boot configuration with the following command:

$ update-grub

Once the GRUB boot configuration is updated, click on Reboot to restart your Proxmox VE 8 server for the changes to take effect.

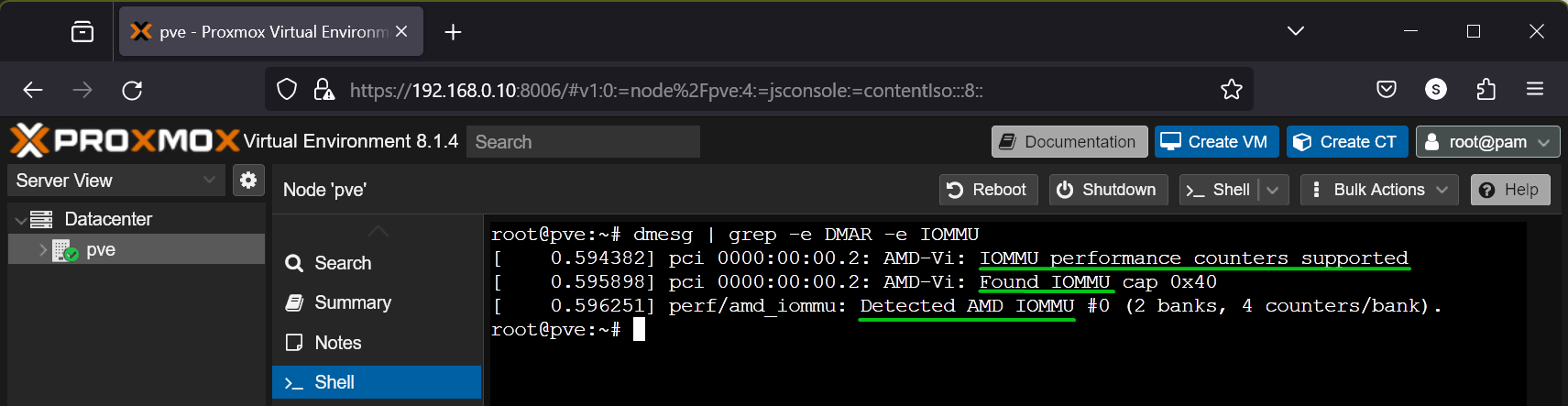

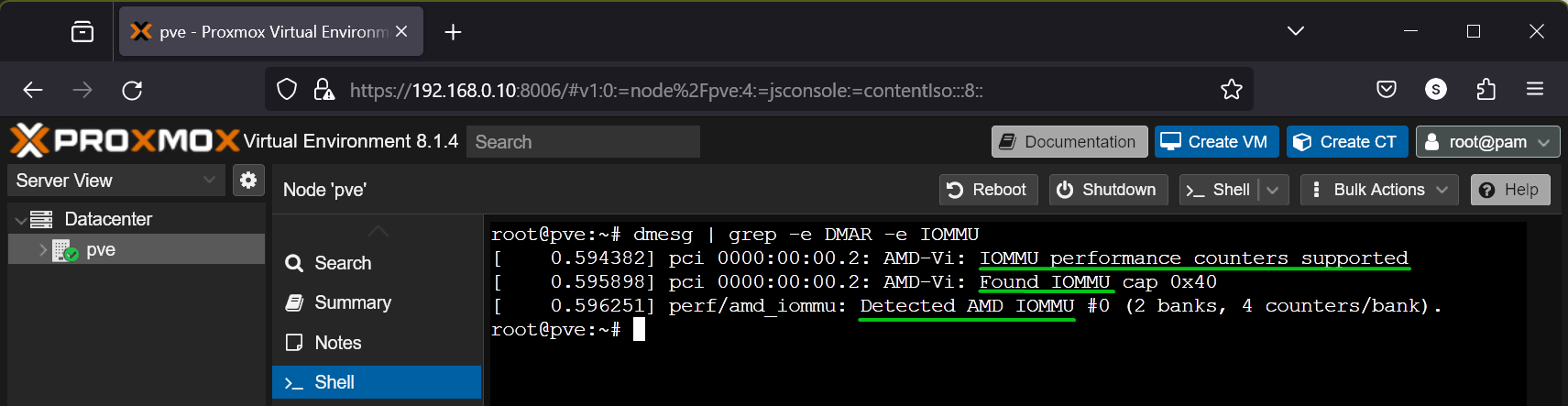

Verifying if IOMMU is Enabled on Proxmox VE 8

To verify whether IOMMU is enabled on Proxmox VE 8, run the following command:

$ dmesg | grep -e DMAR -e IOMMU

If IOMMU is enabled, you will see some output confirming that IOMMU is enabled.

If IOMMU is not enabled, you may not see any output.
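A second check that does not depend on the kernel log is to count the entries under /sys/kernel/iommu_groups, which stays empty when no IOMMU is active. A minimal sketch (the function name iommu_group_count is hypothetical):

```shell
#!/bin/bash
# Sketch: count the IOMMU groups the kernel has created.
# A result of 0 suggests that IOMMU is not active.
iommu_group_count() {
    # sysfs directory with one sub-directory per IOMMU group
    local root="${1:-/sys/kernel/iommu_groups}"
    # A missing directory means there are no groups at all
    [ -d "$root" ] || { echo 0; return 0; }
    find "$root" -mindepth 1 -maxdepth 1 -type d | wc -l
}
```

Calling iommu_group_count without arguments reads the real sysfs tree. Any number greater than zero means the groups exist and passthrough has something to work with.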

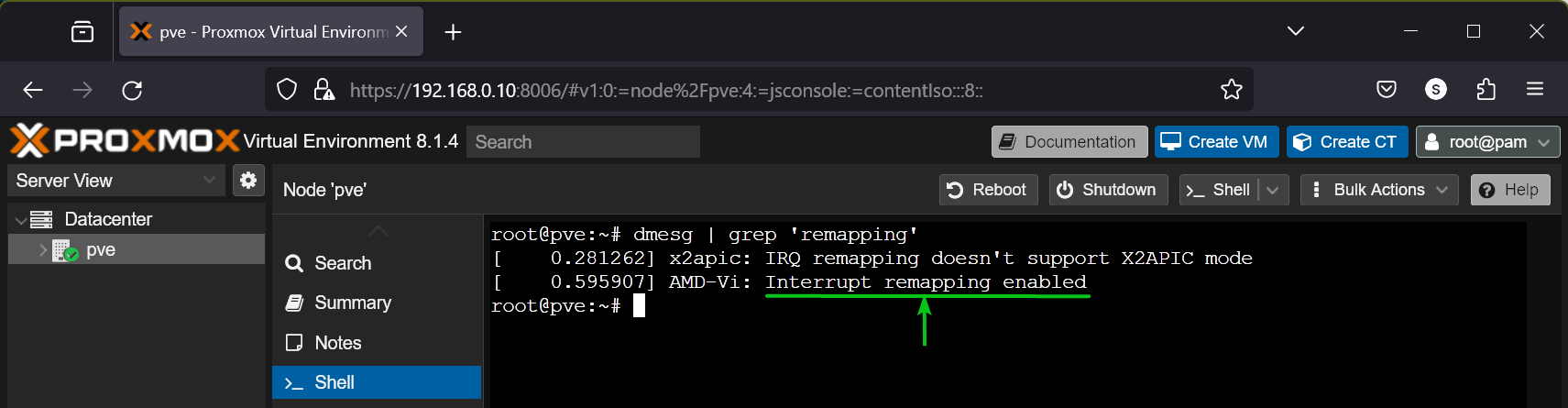

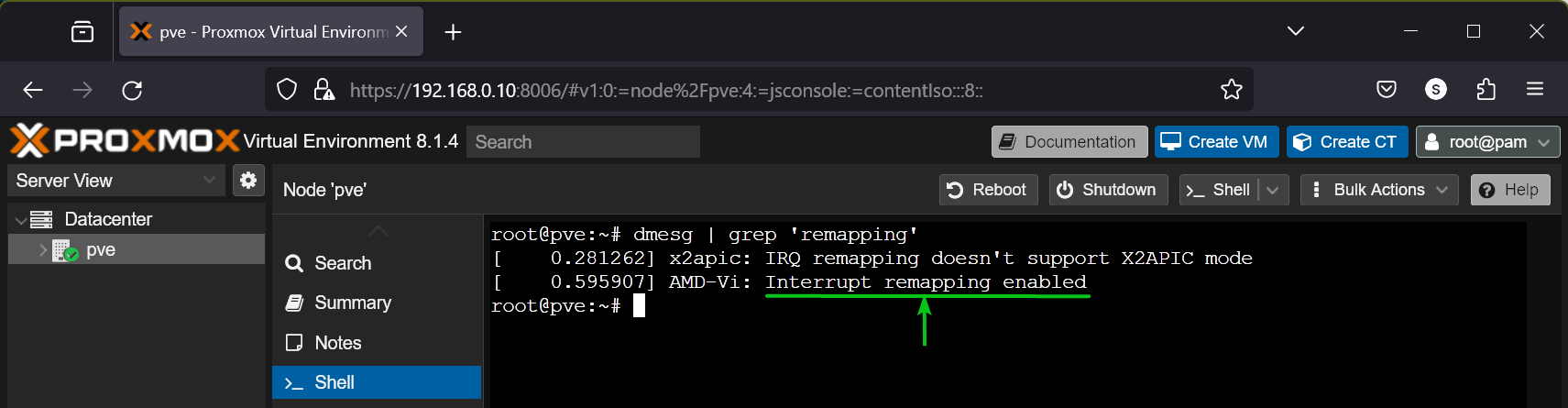

You also need to have IOMMU Interrupt Remapping enabled for PCI/PCIE passthrough to work.

To check if IOMMU Interrupt Remapping is enabled on your Proxmox VE 8 server, run the following command:

$ dmesg | grep 'remapping'

As you can see, IOMMU Interrupt Remapping is enabled on my Proxmox VE 8 server.

NOTE: Most modern AMD and Intel processors will have IOMMU Interrupt Remapping enabled. If, for any reason, you don't have IOMMU Interrupt Remapping enabled, there's a workaround: you have to enable Unsafe Interrupts for VFIO. Read this article for more information on enabling Unsafe Interrupts on your Proxmox VE 8 server.

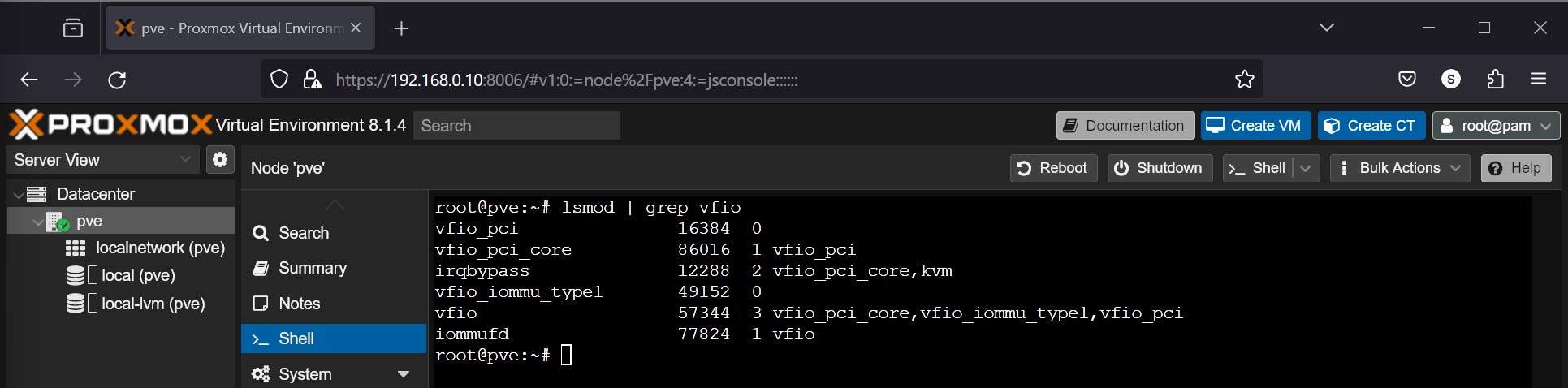

Loading VFIO Kernel Modules on Proxmox VE 8

PCI/PCIE passthrough is done mainly by the VFIO (Virtual Function I/O) kernel modules on Proxmox VE 8. The VFIO kernel modules are not loaded at boot time by default on Proxmox VE 8, but it's easy to load them at boot time.

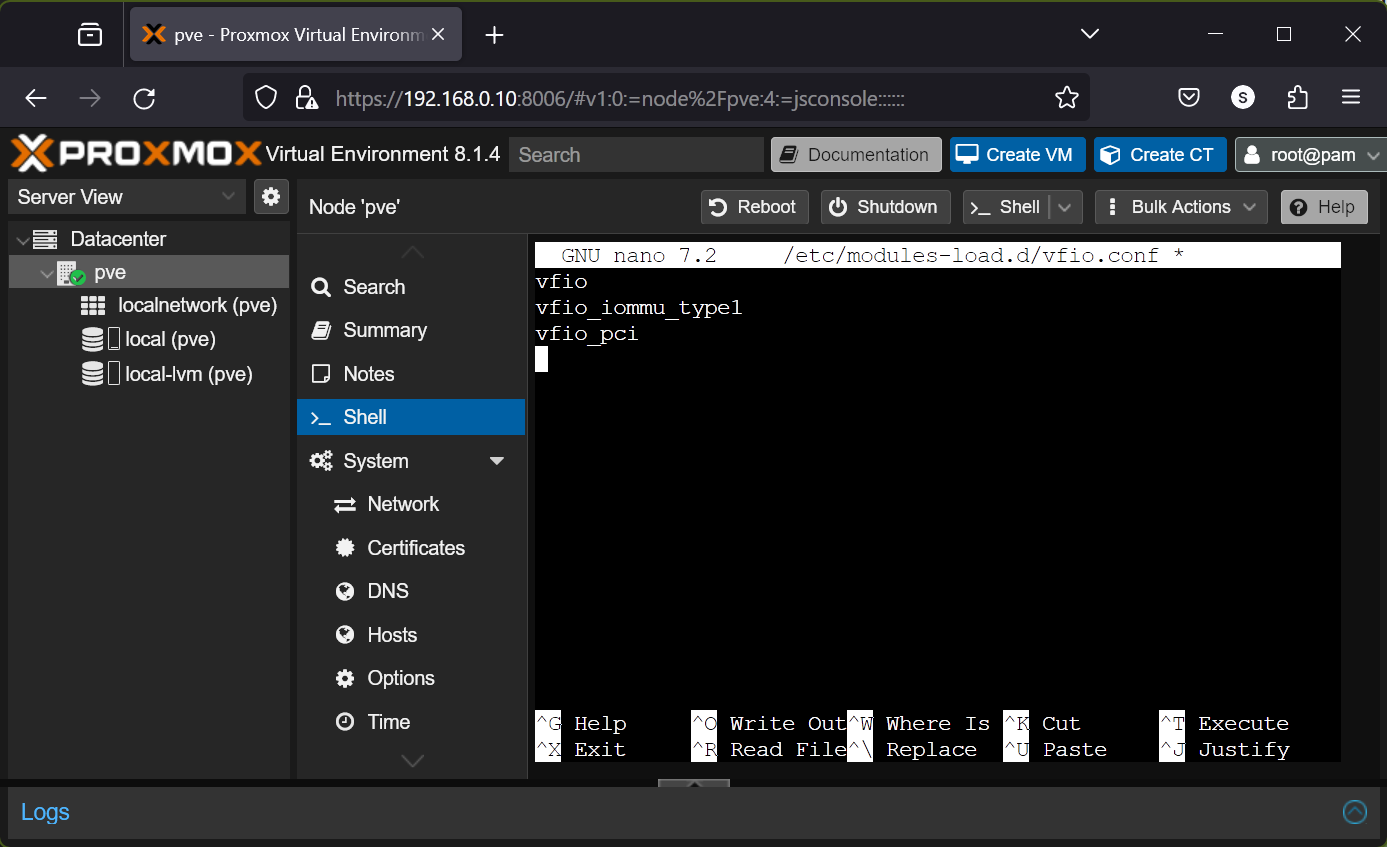

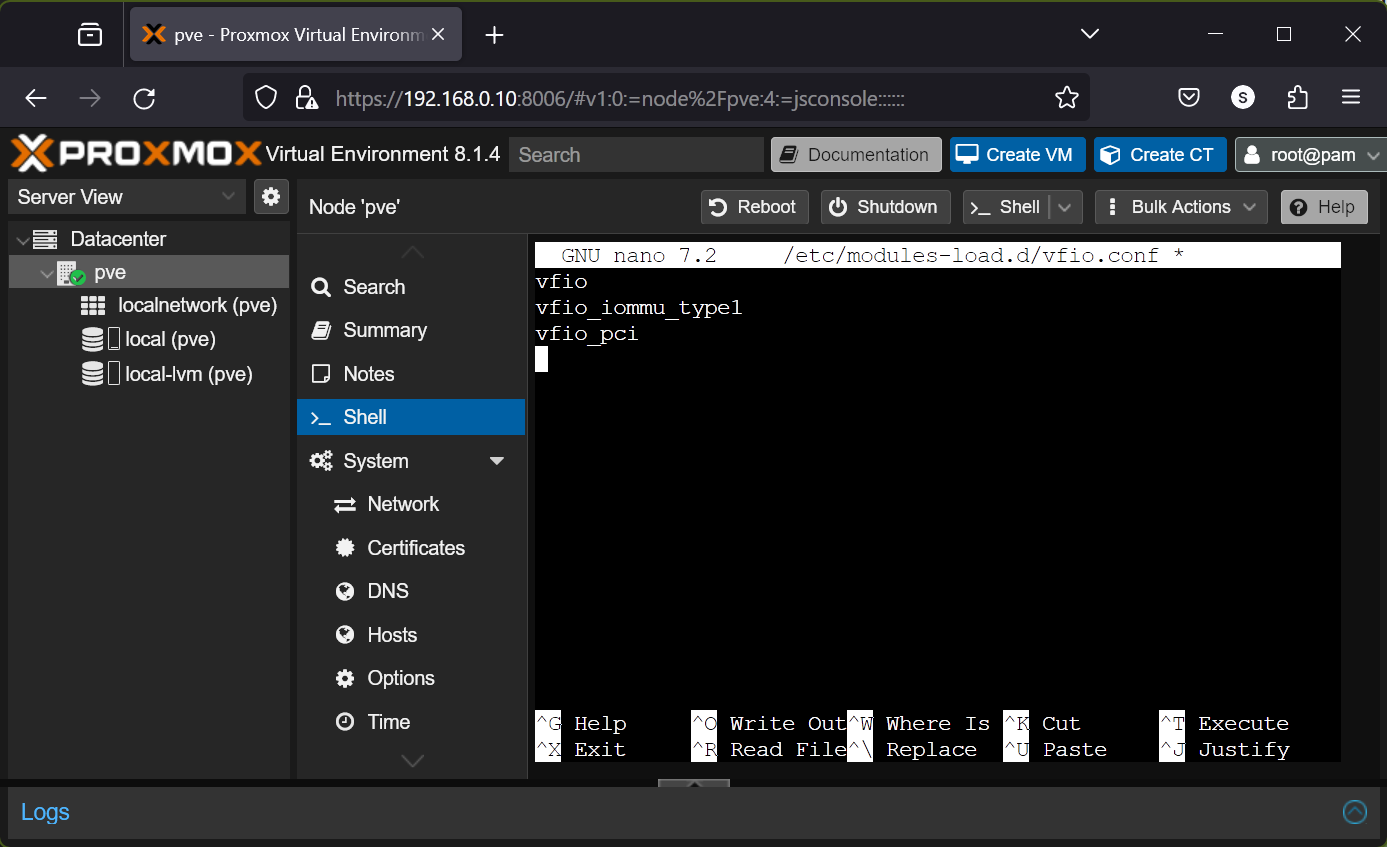

First, open the /etc/modules-load.d/vfio.conf file with the nano text editor as follows:

$ nano /etc/modules-load.d/vfio.conf

Type in the following lines in the /etc/modules-load.d/vfio.conf file.

vfio_iommu_type1

vfio_pci

Once you're done, press Ctrl + X followed by Y and Enter to save the file.

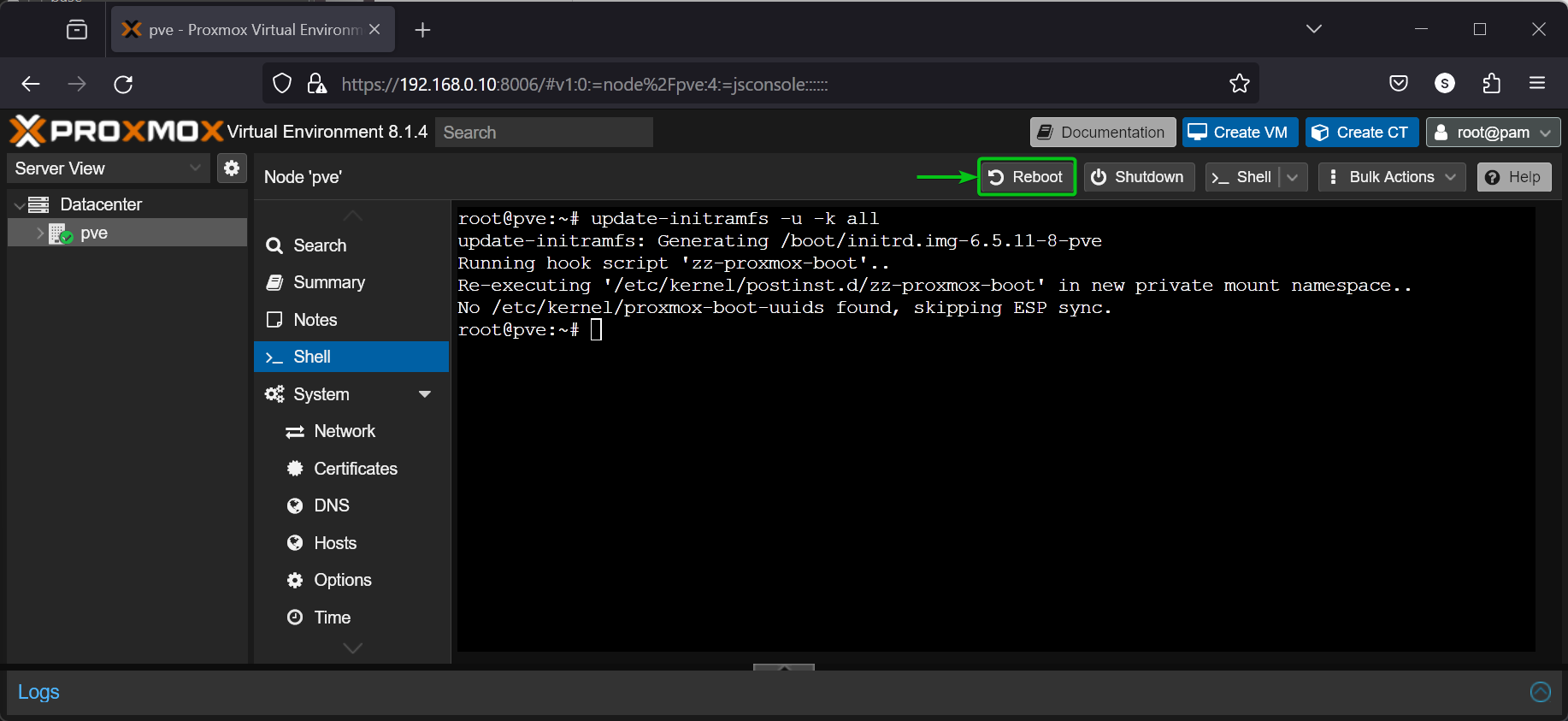

Now, update the initramfs of your Proxmox VE 8 installation with the following command:

$ update-initramfs -u -k all

Once the initramfs is updated, click on Reboot to restart your Proxmox VE 8 server for the changes to take effect.

Once your Proxmox VE 8 server boots, you should see that all the required VFIO kernel modules are loaded.

$ lsmod | grep vfio
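To make the same check from a script, you can look for the module names in /proc/modules. The sketch below is an assumption-laden helper, not a Proxmox tool: check_vfio_modules is a name I made up, and it expects vfio to show up as its own module (it is normally pulled in as a dependency of vfio_pci).

```shell
#!/bin/bash
# Sketch: report whether the VFIO modules appear in a /proc/modules-style list.
# Returns 0 if all are loaded and 1 if at least one is missing.
check_vfio_modules() {
    local modules_file="${1:-/proc/modules}"
    local mod missing=0
    for mod in vfio vfio_iommu_type1 vfio_pci; do
        # Each line of /proc/modules starts with the module name and a space
        if grep -q "^${mod} " "$modules_file"; then
            echo "$mod: loaded"
        else
            echo "$mod: NOT loaded"
            missing=1
        fi
    done
    return "$missing"
}
```

Run check_vfio_modules after the reboot. A non-zero exit status tells you that at least one module is missing, which usually points back to the vfio.conf file or a skipped initramfs update.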

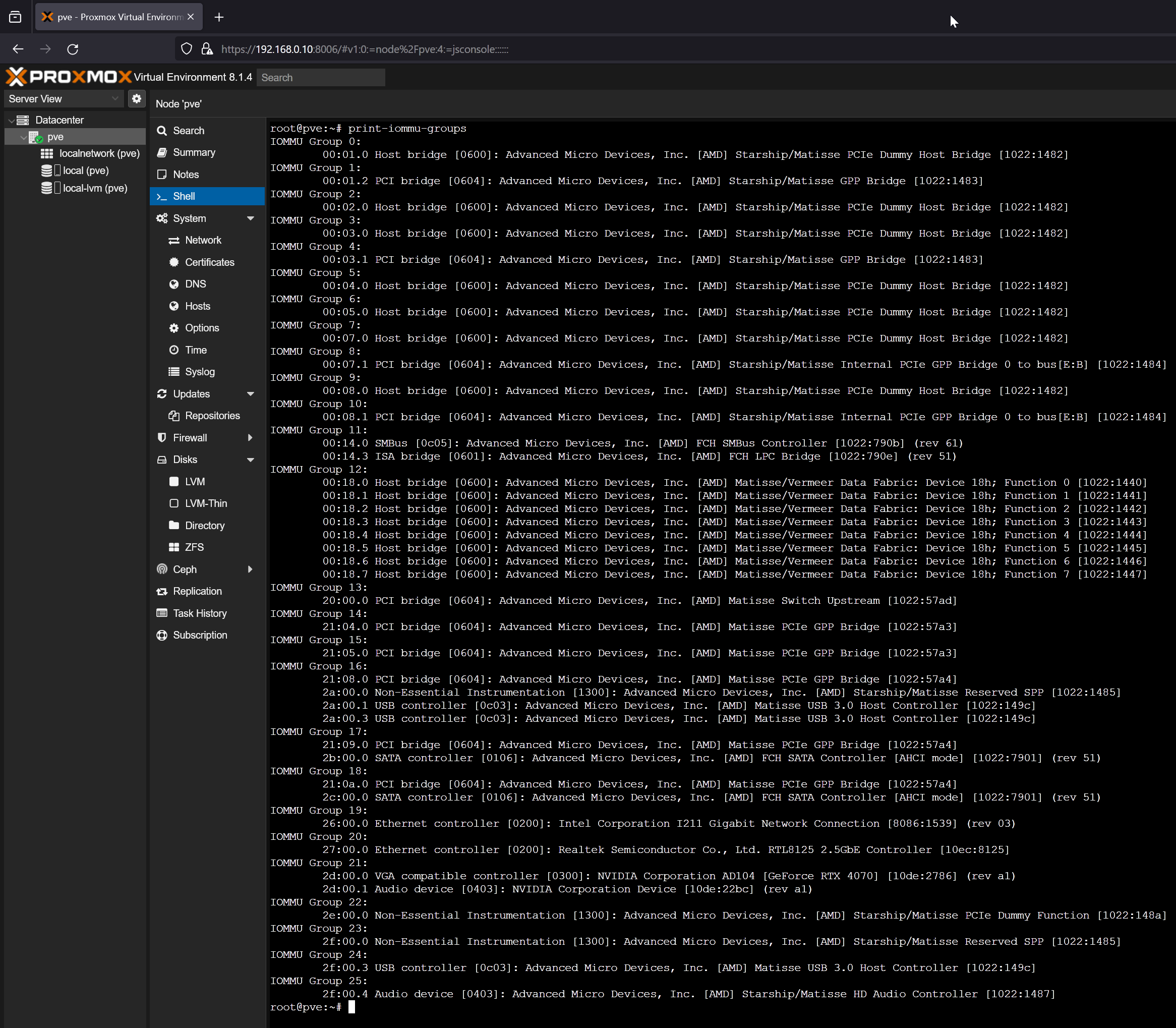

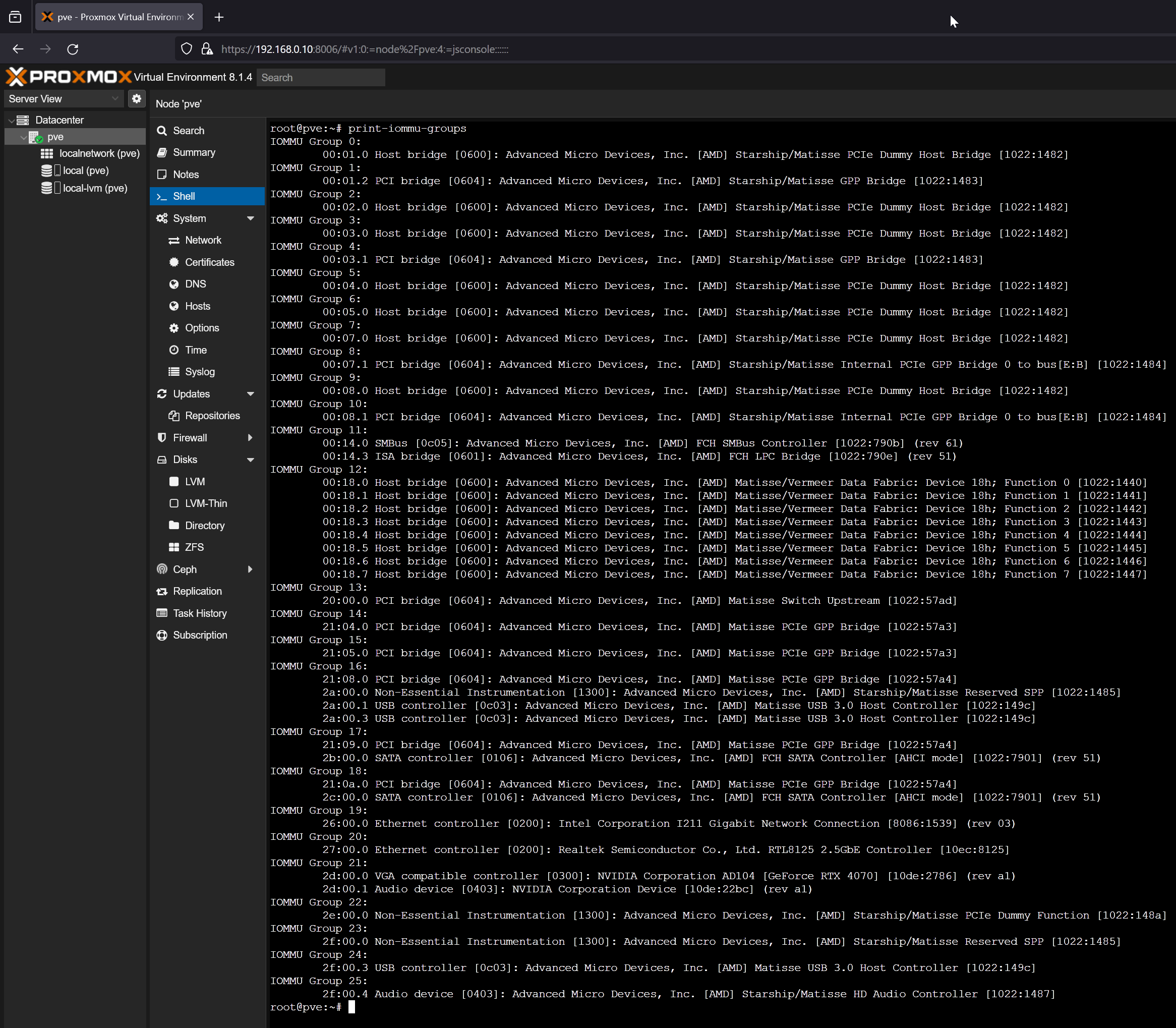

Listing IOMMU Groups on Proxmox VE 8

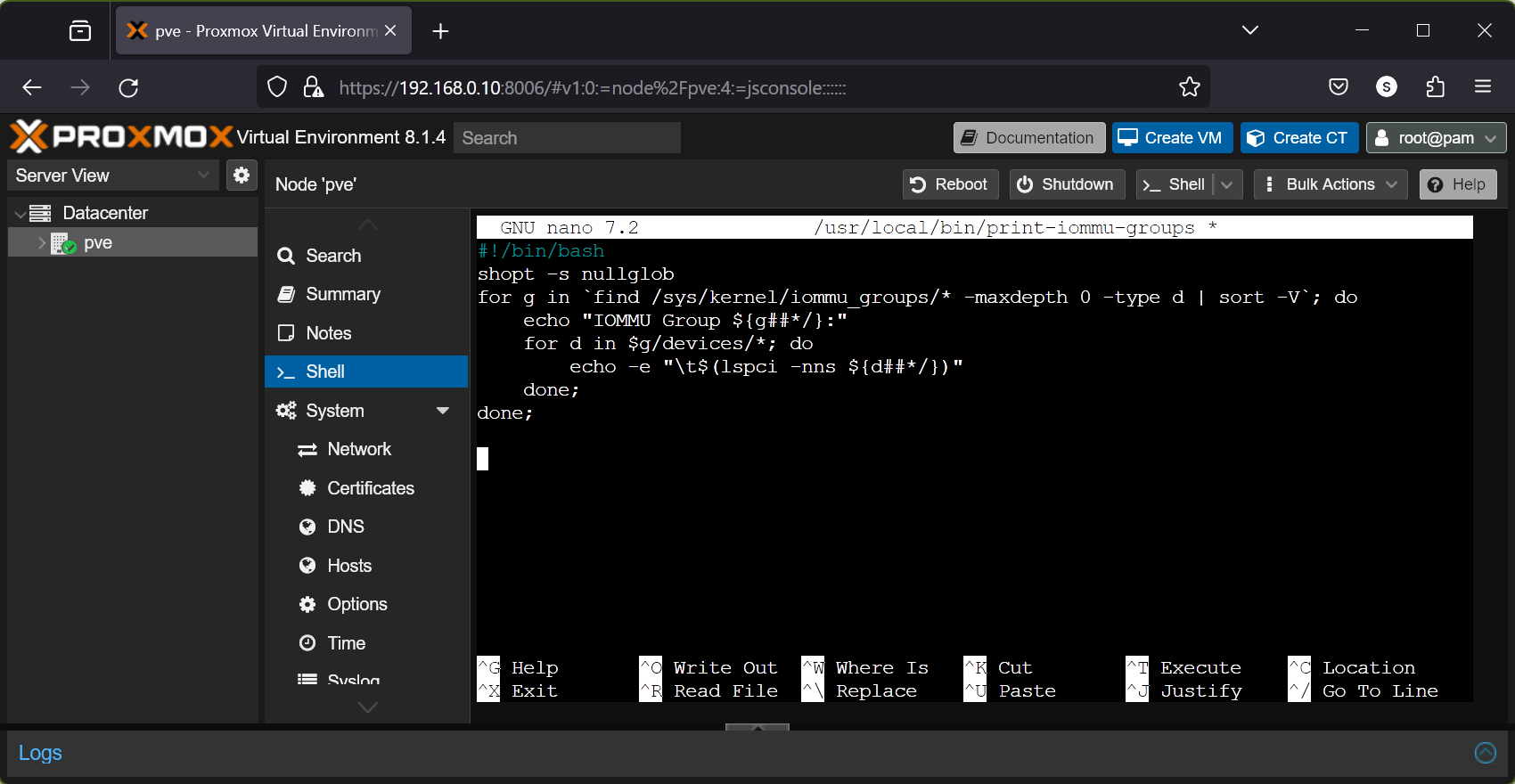

To passthrough PCI/PCIE devices on Proxmox VE 8 virtual machines (VMs), you will need to check the IOMMU groups of your PCI/PCIE devices quite frequently. To make checking for IOMMU groups easier, I decided to put a shell script (I got it from GitHub, but I can't remember the name of the original poster) in the path /usr/local/bin/print-iommu-groups so that I can just run the print-iommu-groups command and it will print the IOMMU groups on the Proxmox VE 8 shell.

First, create a new file print-iommu-groups in the path /usr/local/bin and open it with the nano text editor as follows:

$ nano /usr/local/bin/print-iommu-groups

Type in the following lines in the print-iommu-groups file:

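#!/bin/bash

# Expand unmatched globs to nothing instead of the literal pattern

shopt -s nullglob

# Walk the IOMMU groups in numeric order

for g in `find /sys/kernel/iommu_groups/* -maxdepth 0 -type d | sort -V`; do

echo "IOMMU Group ${g##*/}:"

# Print every PCI device in the group with its [vendor:device] IDs

for d in $g/devices/*; do

echo -e "\t$(lspci -nns ${d##*/})"

done;

done;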

Once you're done, press Ctrl + X followed by Y and Enter to save the file.

Make the print-iommu-groups script file executable with the following command:

$ chmod +x /usr/local/bin/print-iommu-groups

Now, you can run the print-iommu-groups command as follows to print the IOMMU groups of the PCI/PCIE devices installed on your Proxmox VE 8 server:

$ print-iommu-groups

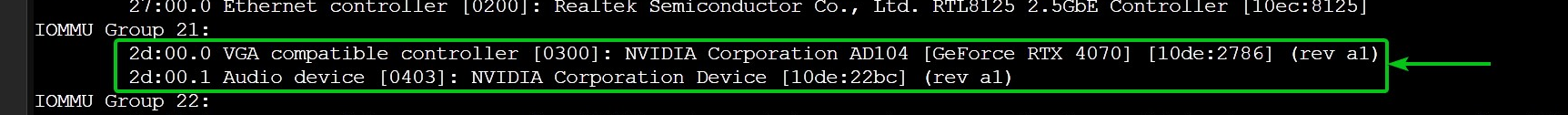

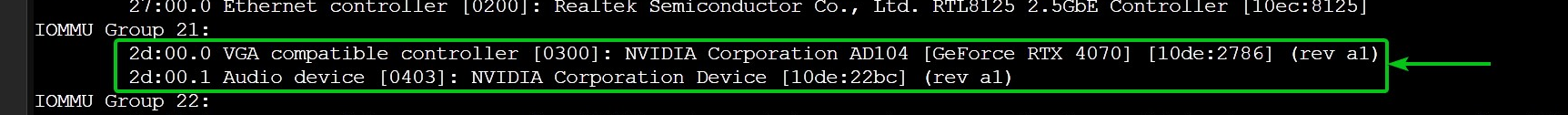

As you can see, the IOMMU groups of the PCI/PCIE devices installed on my Proxmox VE 8 server are printed.

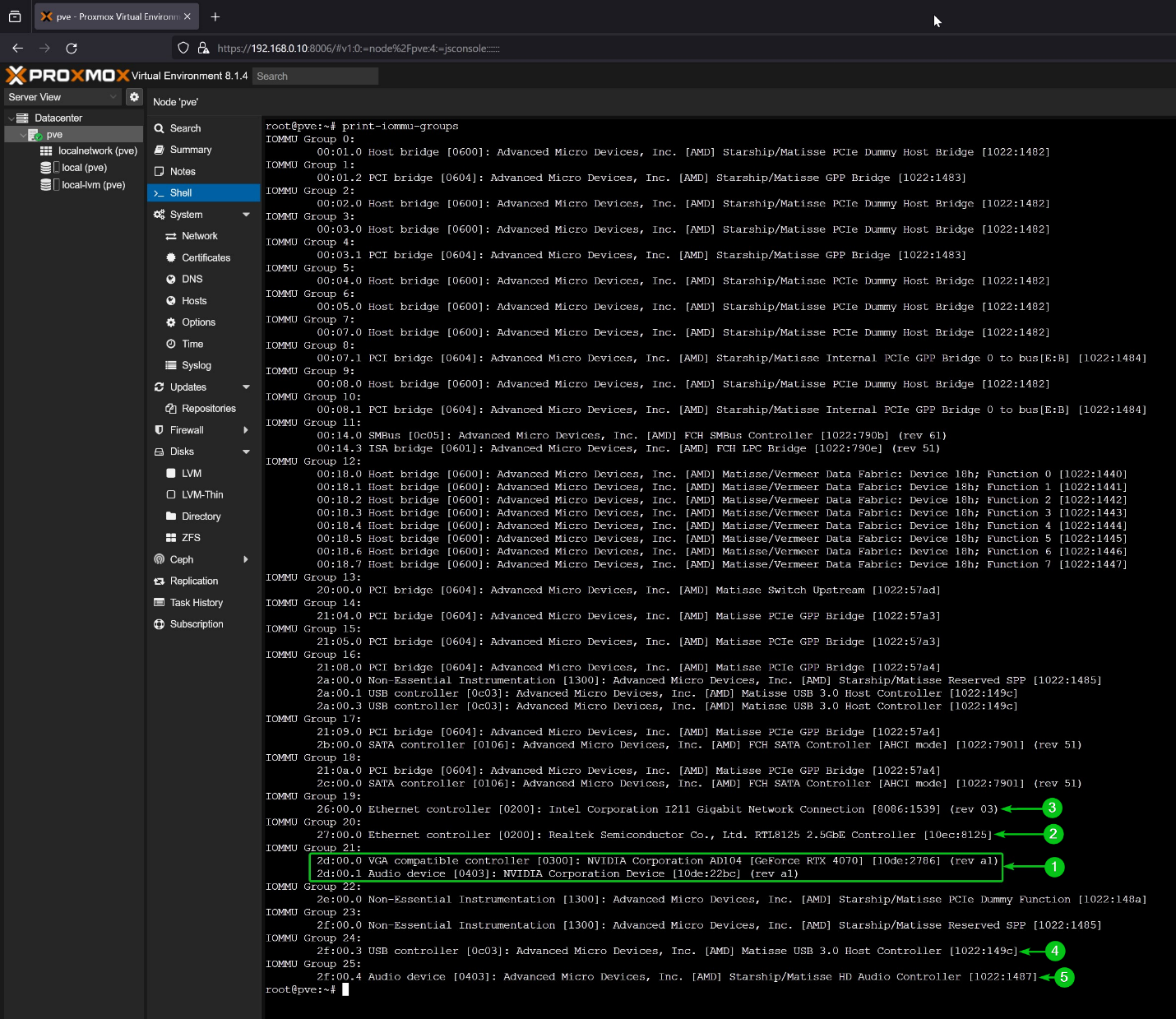

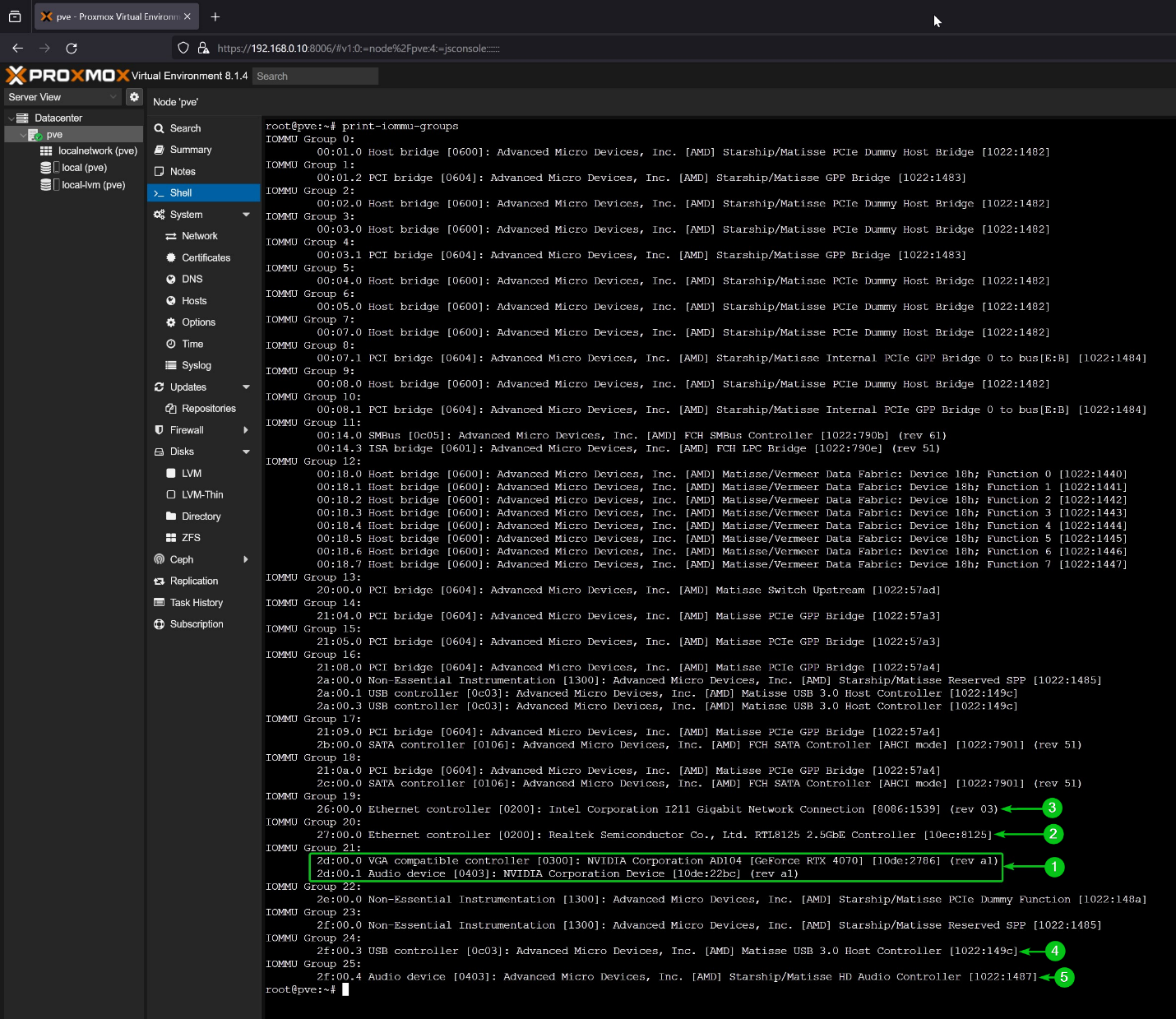

Checking if Your NVIDIA GPU Can Be Passthrough to a Proxmox VE 8 Virtual Machine (VM)

To passthrough a PCI/PCIE device to a Proxmox VE 8 virtual machine (VM), it must be in its own IOMMU group (a GPU and its own audio device count as one device here). If 2 or more unrelated PCI/PCIE devices share an IOMMU group, you can't passthrough any one of them on its own to a Proxmox VE 8 virtual machine (VM).

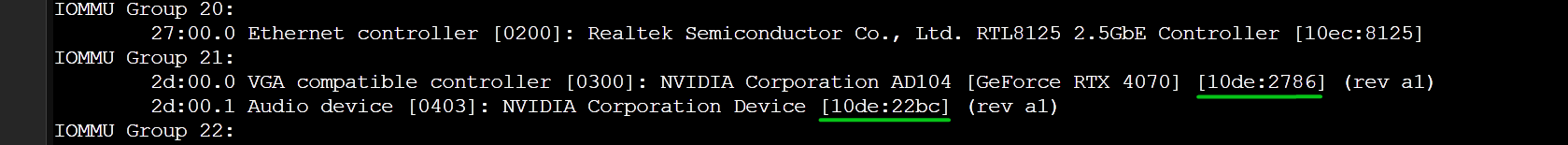

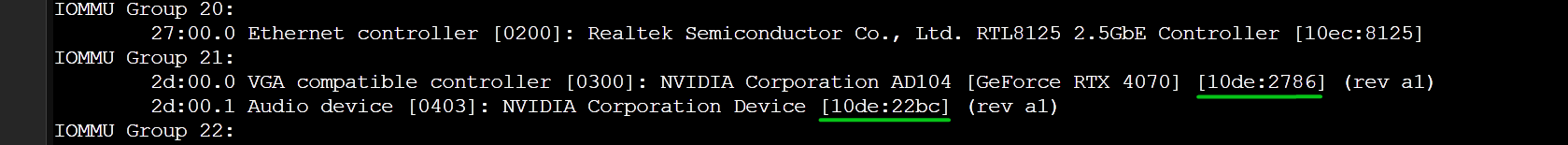

So, if your NVIDIA GPU and its audio device are in their own IOMMU group, you can passthrough the NVIDIA GPU to any Proxmox VE 8 virtual machine (VM).

On my Proxmox VE 8 server, I'm using an MSI X570 ACE motherboard paired with a Ryzen 3900X processor and a Gigabyte RTX 4070 NVIDIA GPU. According to the IOMMU groups of my system, I can passthrough the NVIDIA RTX 4070 GPU (IOMMU Group 21), the RTL8125 2.5GbE Ethernet Controller (IOMMU Group 20), the Intel I211 Gigabit Ethernet Controller (IOMMU Group 19), a USB 3.0 controller (IOMMU Group 24), and the Onboard HD Audio Controller (IOMMU Group 25).

As the main focus of this article is configuring Proxmox VE 8 for passing through the NVIDIA GPU to Proxmox VE 8 virtual machines, the NVIDIA GPU and its audio device must be in their own IOMMU group.
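To look at just one device instead of the whole list, you can follow its iommu_group link in sysfs and list everything that shares the group. This is a minimal sketch: the function name iommu_group_members is hypothetical, and the PCI address in the usage note is only an example.

```shell
#!/bin/bash
# Sketch: list the PCI addresses that share an IOMMU group with one device.
# $1: full PCI address of the device, including the domain
# $2: sysfs root, defaults to /sys (overridable for testing)
iommu_group_members() {
    local addr="$1" sysfs="${2:-/sys}"
    local link="$sysfs/bus/pci/devices/$addr/iommu_group"
    if [ ! -e "$link" ]; then
        echo "no IOMMU group found for $addr" >&2
        return 1
    fi
    # The link points at /sys/kernel/iommu_groups/<number>
    local group_dir
    group_dir=$(readlink -f "$link")
    echo "IOMMU Group ${group_dir##*/}:"
    ls "$group_dir/devices"
}
```

For example, iommu_group_members 0000:2d:00.0 (an example address; take yours from lspci -D) should list only the GPU and its audio device if the GPU can be passed through cleanly.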

Checking for the Kernel Modules to Blacklist for PCI/PCIE Passthrough on Proxmox VE 8

To passthrough a PCI/PCIE device on a Proxmox VE 8 virtual machine (VM), you must make sure that Proxmox VE forces it to use the VFIO kernel module instead of its original kernel module.

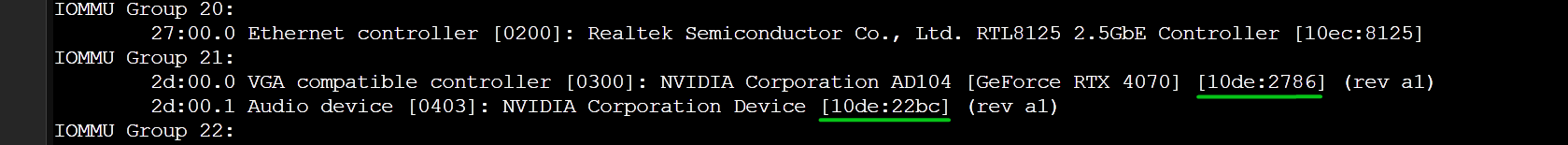

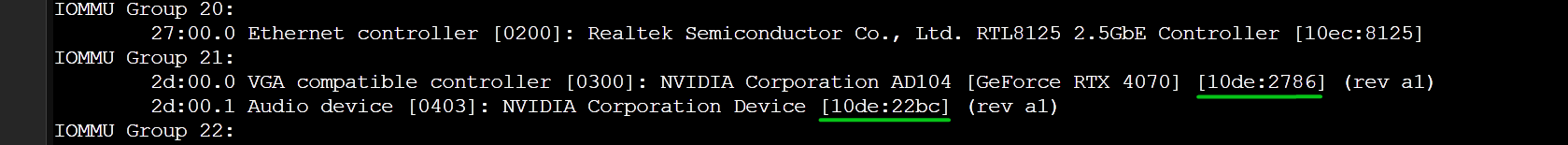

To find out the kernel module your PCI/PCIE devices are using, you will need to know the vendor ID and device ID of these PCI/PCIE devices. You can find the vendor ID and device ID of the PCI/PCIE devices using the print-iommu-groups command.

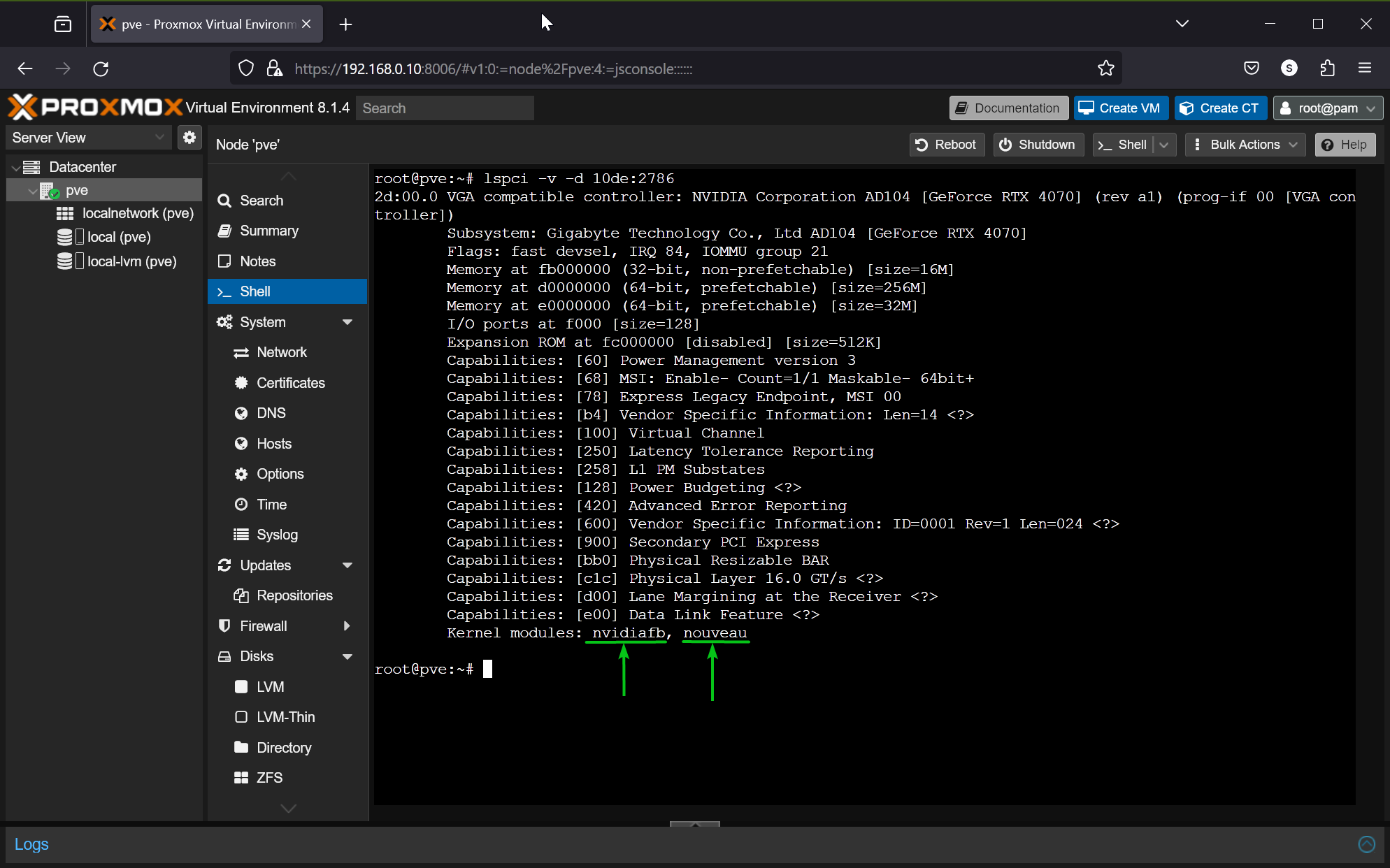

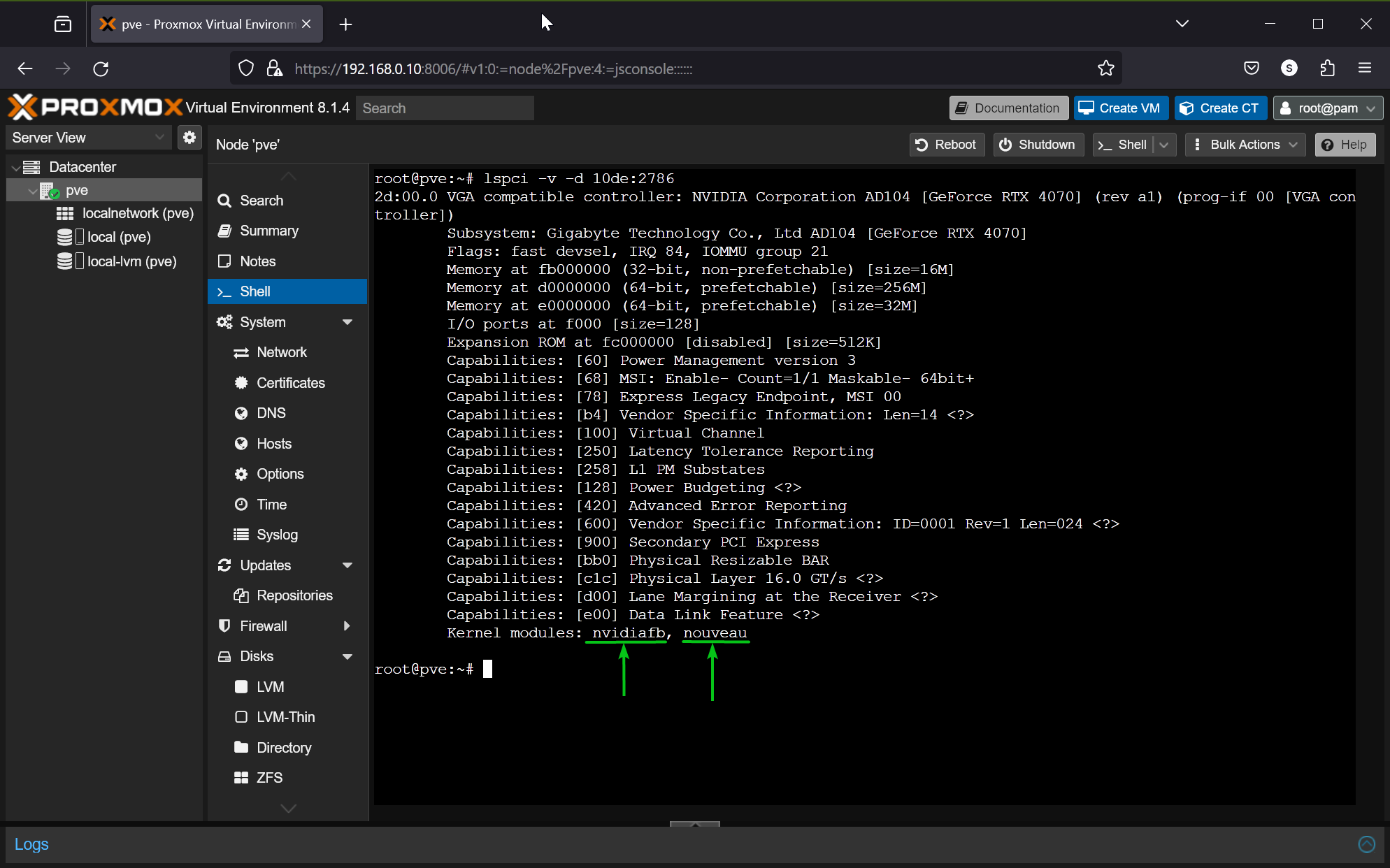

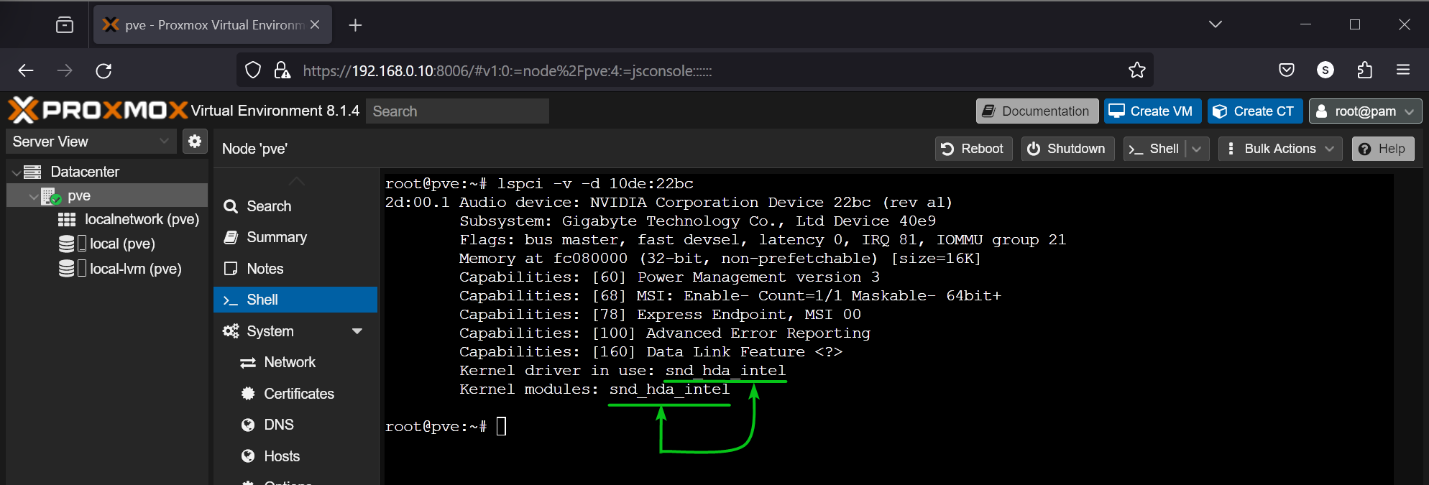

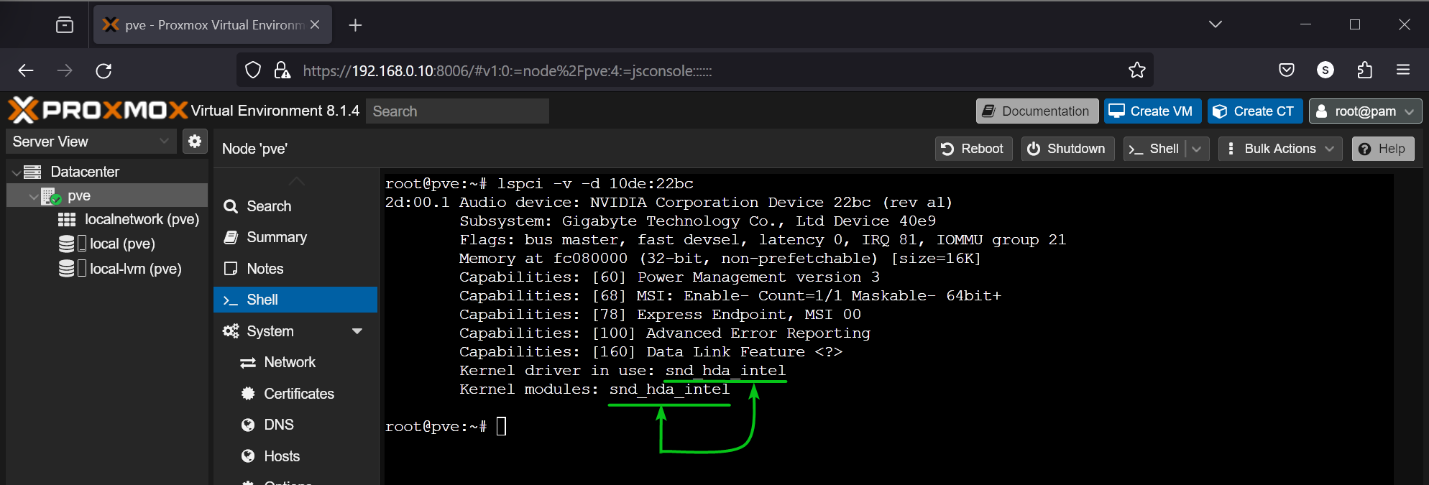

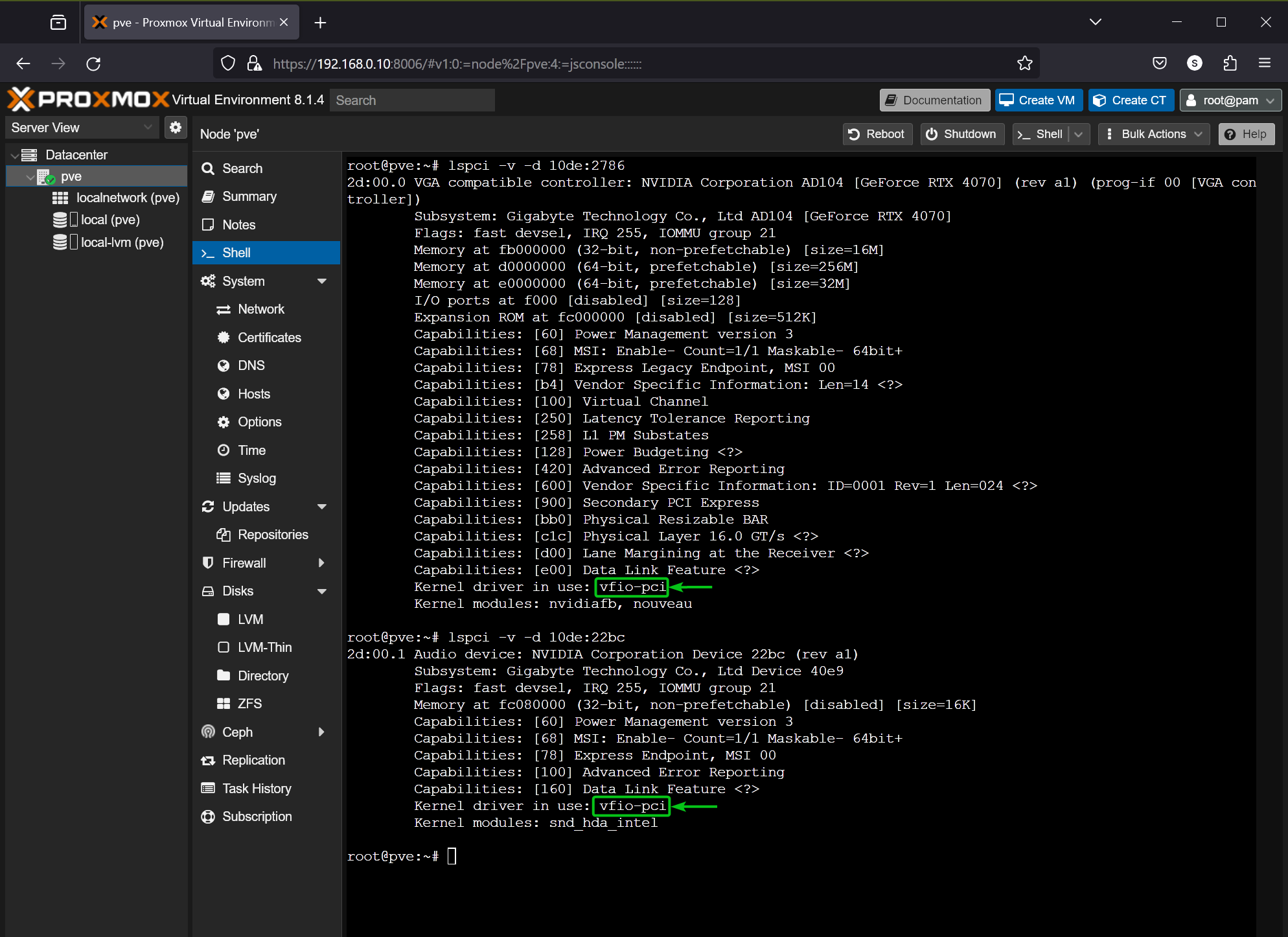

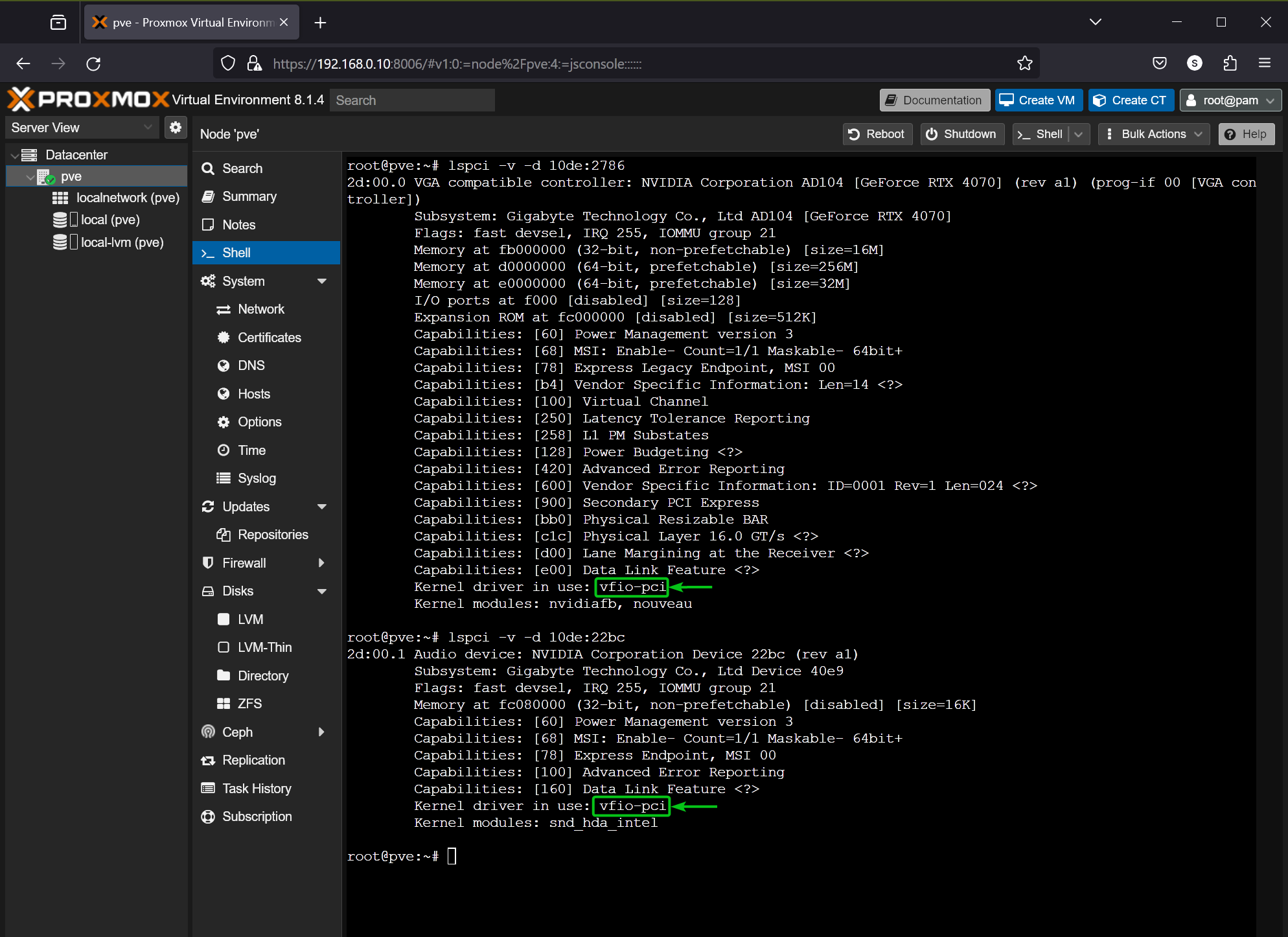

For example, the vendor ID and device ID of my NVIDIA RTX 4070 GPU is 10de:2786 and its audio device is 10de:22bc.

To find the kernel module that the PCI/PCIE device 10de:2786 (my NVIDIA RTX 4070 GPU) is using, run the lspci command as follows:

$ lspci -v -d 10de:2786

As you can see, my NVIDIA RTX 4070 GPU is using the nvidiafb and nouveau kernel modules by default. So, it can't be passed to a Proxmox VE 8 virtual machine (VM) at this point.

The audio device of my NVIDIA RTX 4070 GPU is using the snd_hda_intel kernel module. So, it can't be passed to a Proxmox VE 8 virtual machine at this point either.

So, to passthrough my NVIDIA RTX 4070 GPU and its audio device on a Proxmox VE 8 virtual machine (VM), I have to blacklist the nvidiafb, nouveau, and snd_hda_intel kernel modules and configure my NVIDIA RTX 4070 GPU and its audio device to use the vfio-pci kernel module.
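If you want just the module names without reading through the full lspci output, you can filter the "Kernel modules:" line. A minimal sketch, assuming the usual lspci layout where that line holds a comma-separated list (the function name kernel_modules_from_lspci is hypothetical):

```shell
#!/bin/bash
# Sketch: read lspci -v (or lspci -k) output on stdin and print the
# names from the "Kernel modules:" line, one per line.
kernel_modules_from_lspci() {
    awk -F': ' '/Kernel modules:/ {
        # The value is a comma-separated list such as "nvidiafb, nouveau"
        n = split($2, mods, ", ")
        for (i = 1; i <= n; i++) print mods[i]
    }'
}
```

Use it as lspci -v -d 10de:2786 | kernel_modules_from_lspci, replacing the ID with the vendor ID and device ID of your own device. The names it prints are the candidates for blacklisting.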

Blacklisting Required Kernel Modules for PCI/PCIE Passthrough on Proxmox VE 8

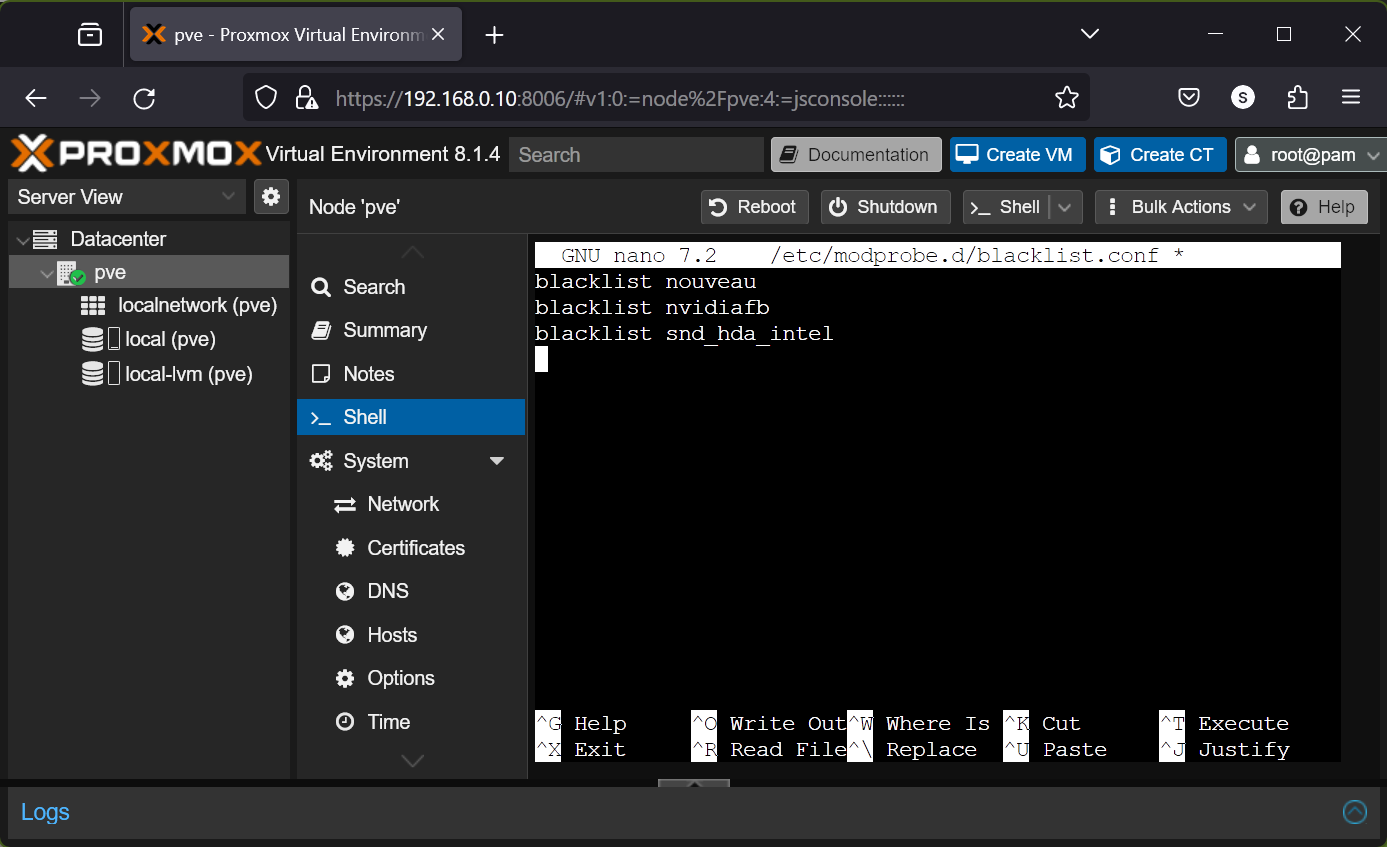

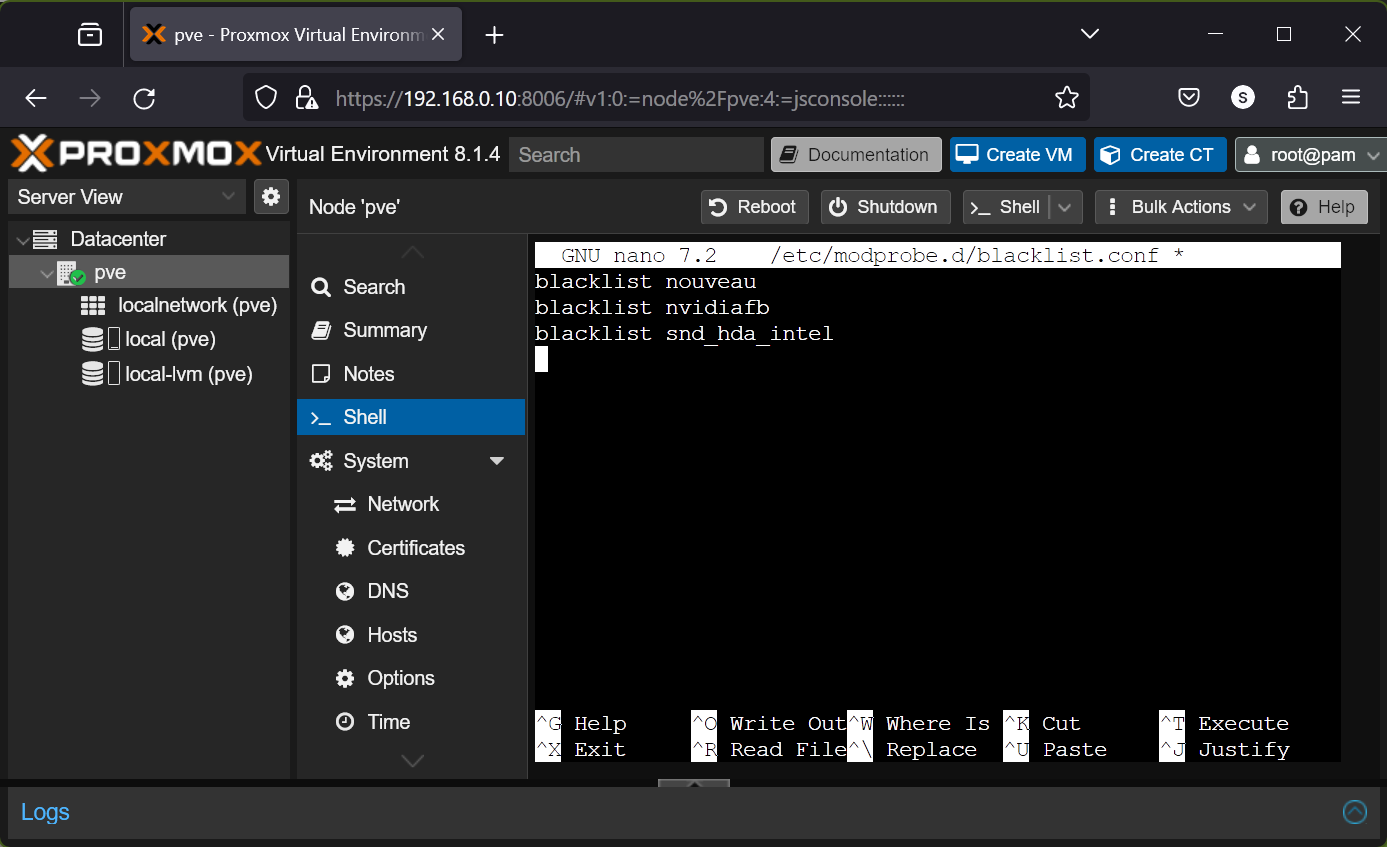

To blacklist kernel modules on Proxmox VE 8, open the /etc/modprobe.d/blacklist.conf file with the nano text editor as follows:

$ nano /etc/modprobe.d/blacklist.conf

To blacklist the nouveau, nvidiafb, and snd_hda_intel kernel modules (to passthrough the NVIDIA GPU), add the following lines to the /etc/modprobe.d/blacklist.conf file:

blacklist nouveau

blacklist nvidiafb

blacklist snd_hda_intel

Once you're done, press Ctrl + X followed by Y and Enter to save the file.
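The same blacklist entries can be added from a script. The helper below is a sketch with a made-up name (blacklist_modules); it only appends a line when that exact line is not in the file yet, so it can be run more than once.

```shell
#!/bin/bash
# Sketch: append "blacklist <module>" lines to a modprobe configuration file.
# Usage: blacklist_modules <conf-file> <module>...
blacklist_modules() {
    local file="$1"; shift
    local mod
    # Make sure the file exists so that grep has something to read
    touch "$file"
    for mod in "$@"; do
        # -x matches the whole line, -F treats the pattern as a plain string
        grep -qxF "blacklist $mod" "$file" || echo "blacklist $mod" >> "$file"
    done
}
```

For the GPU in this article, the call would be blacklist_modules /etc/modprobe.d/blacklist.conf nouveau nvidiafb snd_hda_intel.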

Configuring Your NVIDIA GPU to Use the VFIO Kernel Module on Proxmox VE 8

To configure a PCI/PCIE device (e.g. your NVIDIA GPU) to use the VFIO kernel module, you need to know its vendor ID and device ID.

In this case, the vendor ID and device ID of my NVIDIA RTX 4070 GPU and its audio device are 10de:2786 and 10de:22bc.

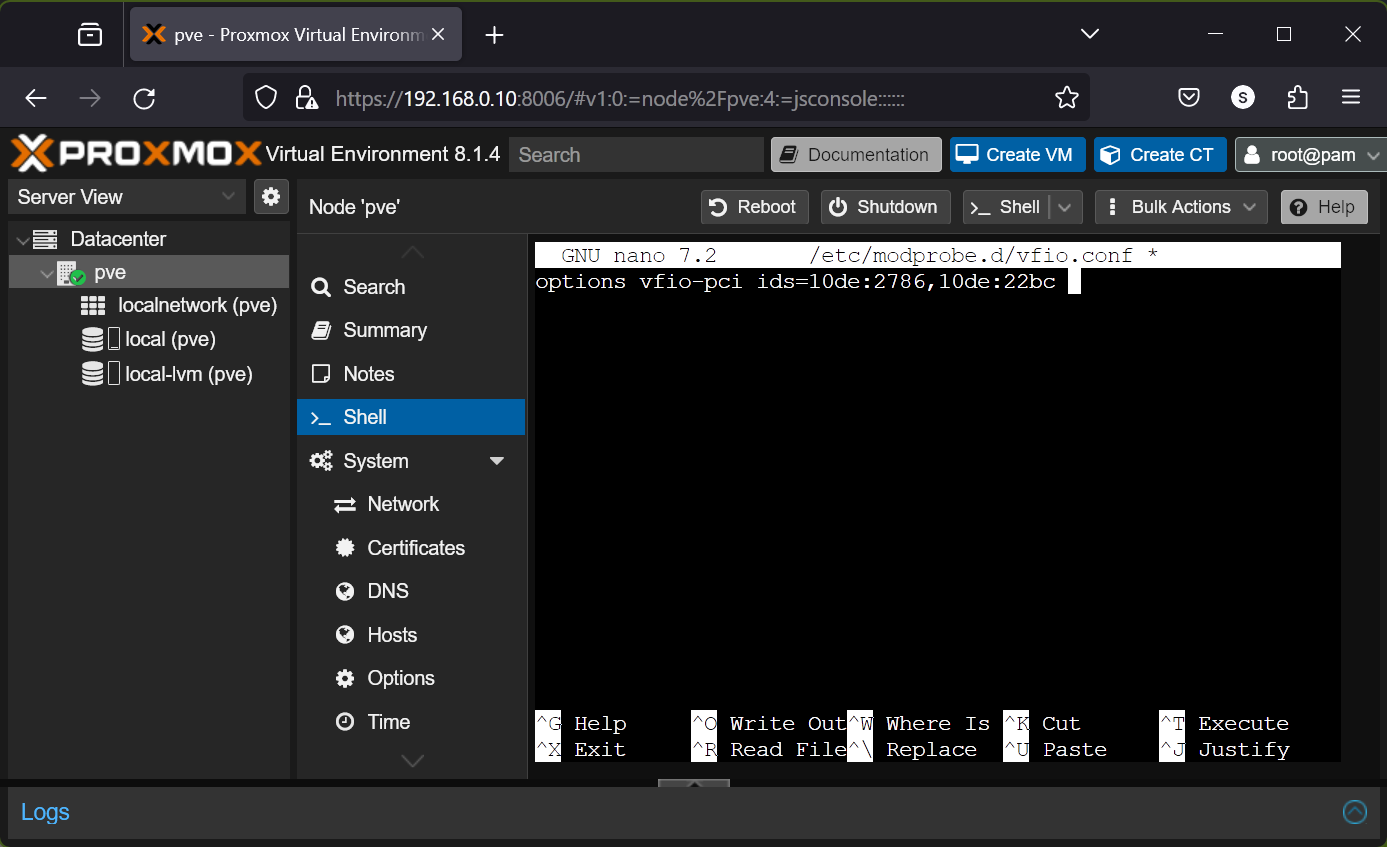

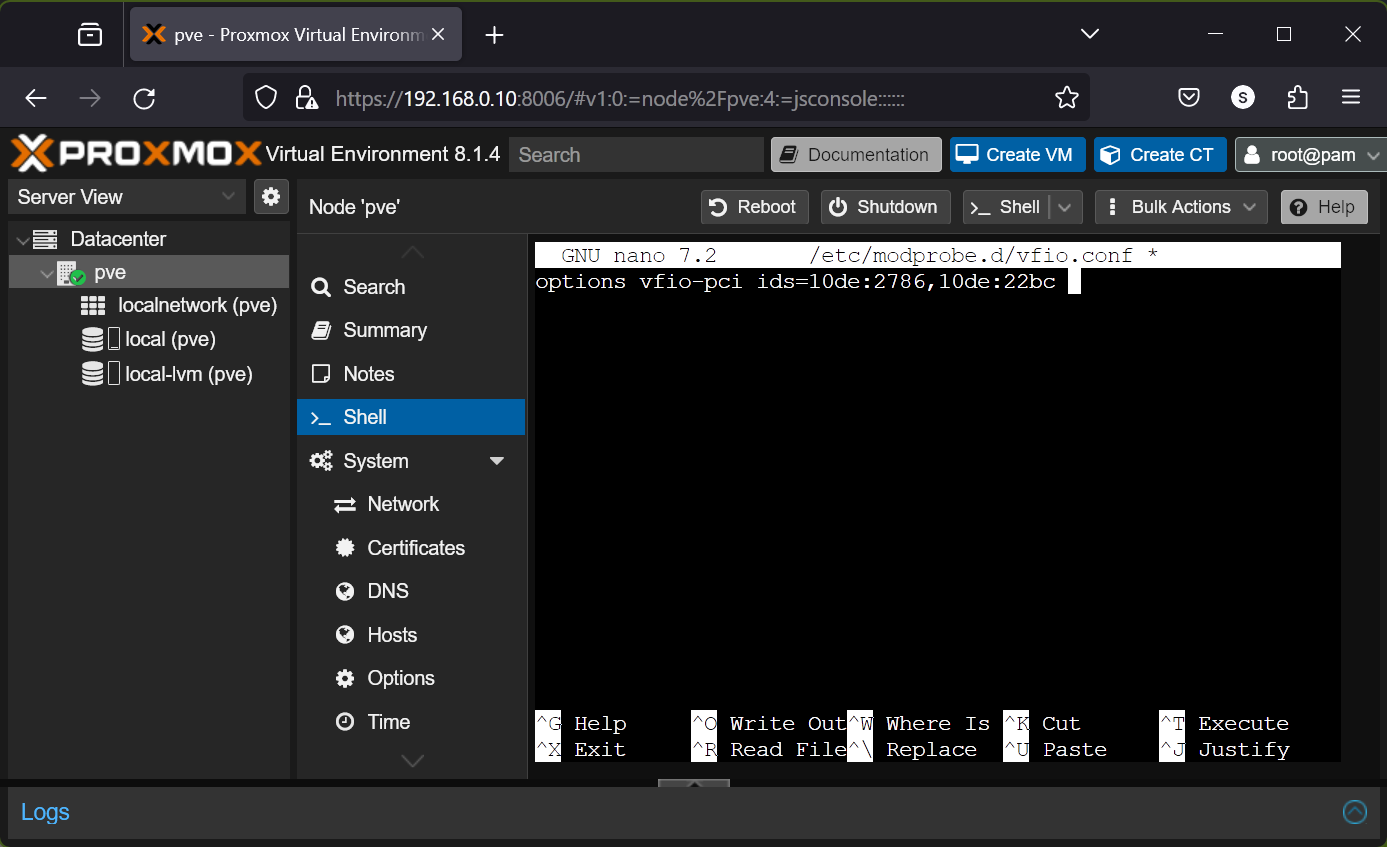

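To configure your NVIDIA GPU to use the VFIO kernel module, open the /etc/modprobe.d/vfio.conf file with the nano text editor as follows:

$ nano /etc/modprobe.d/vfio.conf

To configure your NVIDIA GPU and its audio device with the vendor ID and device ID 10de:2786 and 10de:22bc (in my case) to use the vfio-pci kernel module, add the following line to the /etc/modprobe.d/vfio.conf file:

options vfio-pci ids=10de:2786,10de:22bc

Once you're done, press Ctrl + X followed by Y and Enter to save the file.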
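A typo in the IDs is easy to miss, so it can help to build the line from the IDs and validate them first. A minimal sketch, with a hypothetical function name (vfio_options_line) and a simple four-hex-digits:four-hex-digits format check:

```shell
#!/bin/bash
# Sketch: build the "options vfio-pci ids=..." line from vendor:device IDs.
# Rejects anything that is not in the xxxx:xxxx hexadecimal form.
vfio_options_line() {
    local id
    for id in "$@"; do
        if ! [[ $id =~ ^[0-9a-fA-F]{4}:[0-9a-fA-F]{4}$ ]]; then
            echo "invalid vendor:device ID: $id" >&2
            return 1
        fi
    done
    # Join all arguments with commas
    local IFS=,
    echo "options vfio-pci ids=$*"
}
```

For example, vfio_options_line 10de:2786 10de:22bc prints the line used in this article, and you can redirect that output to /etc/modprobe.d/vfio.conf.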

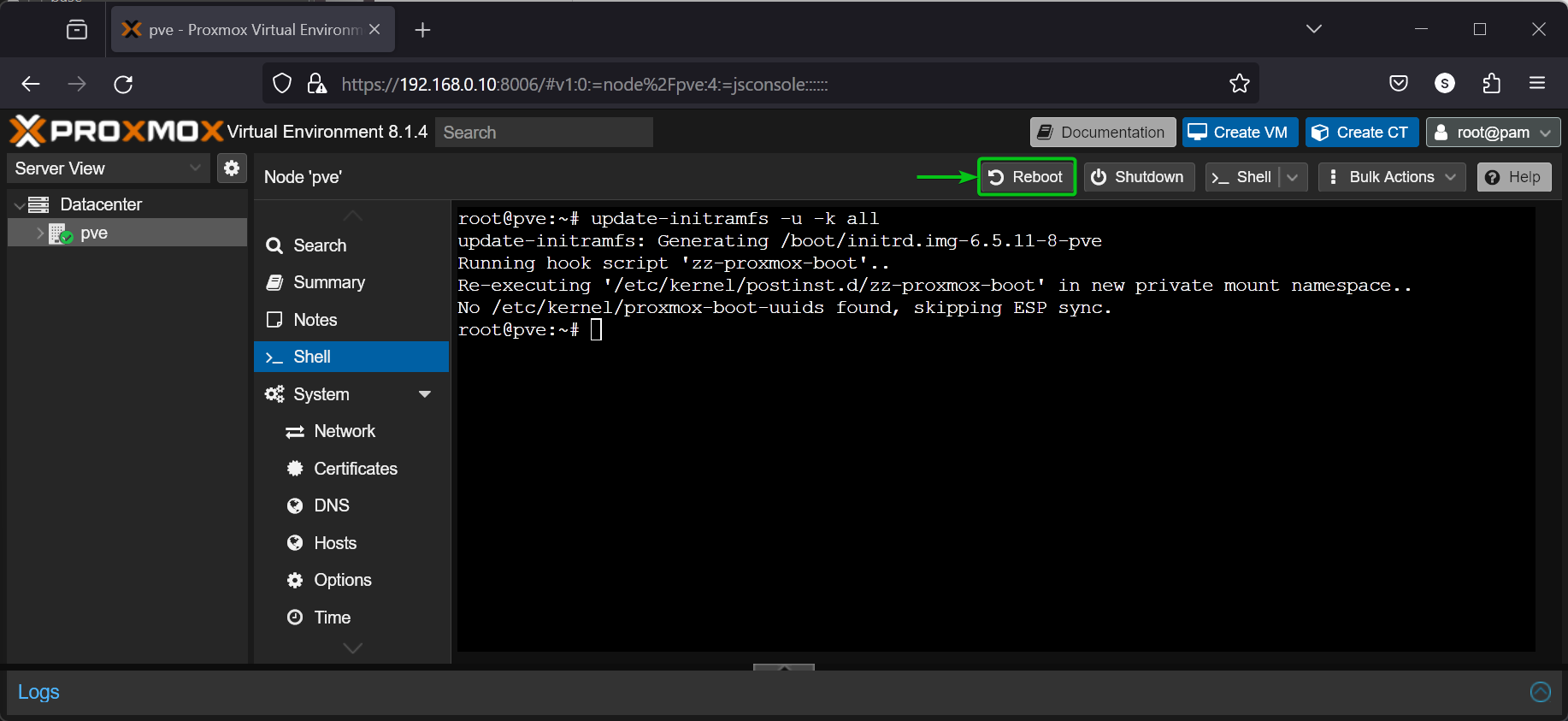

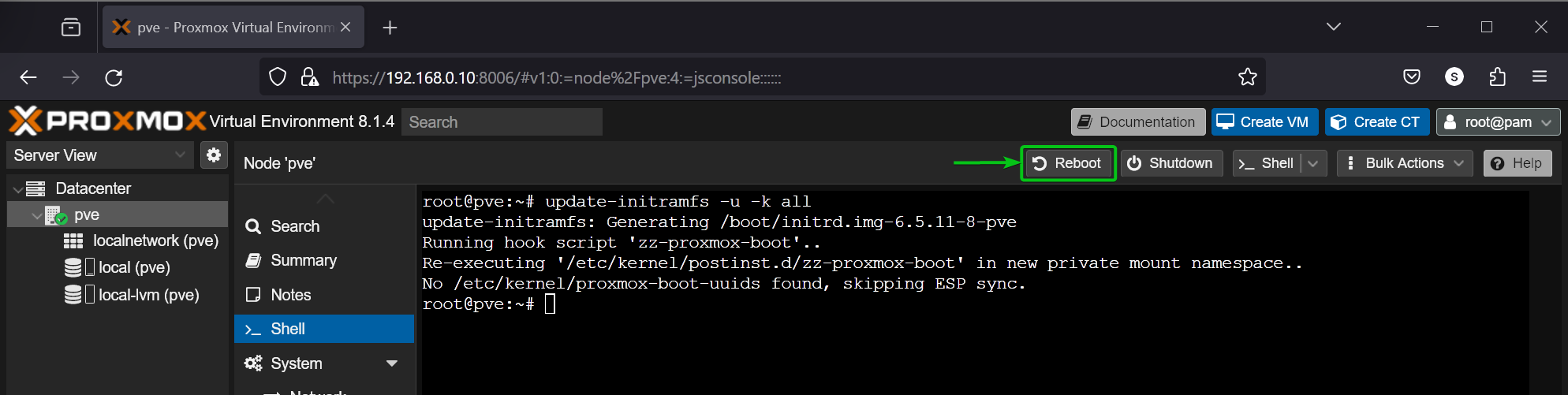

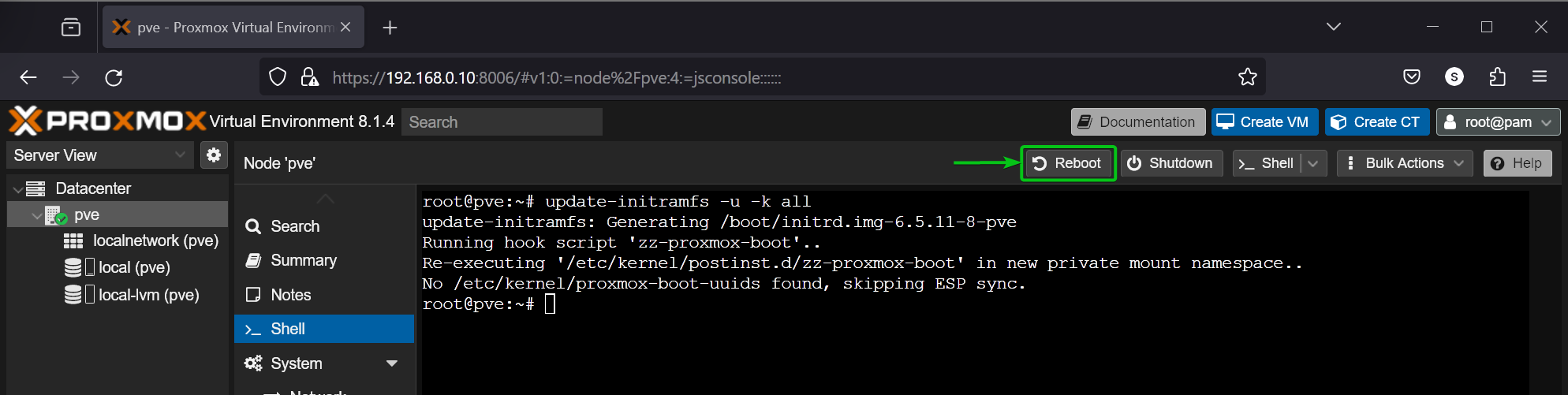

Now, update the initramfs of Proxmox VE 8 with the following command:

$ update-initramfs -u -k all

Once the initramfs is updated, click on Reboot to restart your Proxmox VE 8 server for the changes to take effect.

Once your Proxmox VE 8 server boots, you should see that your NVIDIA GPU and its audio device (10de:2786 and 10de:22bc in my case) are using the vfio-pci kernel module. Now, your NVIDIA GPU is ready to be passed to a Proxmox VE 8 virtual machine.

$ lspci -v -d 10de:2786

$ lspci -v -d 10de:22bc
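The driver that is bound to a device can also be read straight from sysfs, which is handy in scripts. This is a sketch only: pci_driver_in_use is a hypothetical name, and it takes the full PCI address of the device (as printed by lspci -D) rather than the vendor ID and device ID.

```shell
#!/bin/bash
# Sketch: print the kernel driver currently bound to a PCI device.
# $1: full PCI address of the device, including the domain
# $2: sysfs root, defaults to /sys (overridable for testing)
pci_driver_in_use() {
    local addr="$1" sysfs="${2:-/sys}"
    local link="$sysfs/bus/pci/devices/$addr/driver"
    # No driver link means that no driver is bound to the device
    if [ ! -L "$link" ]; then
        echo "none"
        return 0
    fi
    # The link target ends in the driver name, e.g. .../drivers/vfio-pci
    basename "$(readlink "$link")"
}
```

After the reboot, both the GPU and its audio device should report vfio-pci here. If one of them still shows its original driver, recheck the IDs in /etc/modprobe.d/vfio.conf and the blacklist.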

Passthrough the NVIDIA GPU to a Proxmox VE 8 Virtual Machine (VM)

Now that your NVIDIA GPU is ready for passthrough on Proxmox VE 8 virtual machines (VMs), you can passthrough your NVIDIA GPU to your desired Proxmox VE 8 virtual machine and install the NVIDIA GPU drivers as usual, depending on the operating system that you're using on that virtual machine.

For detailed information on how to passthrough your NVIDIA GPU on a Proxmox VE 8 virtual machine (VM) with different operating systems installed, read one of the following articles:

- How to Passthrough an NVIDIA GPU to a Windows 11 Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU to an Ubuntu 24.04 LTS Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU to a Linux Mint 21 Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU to a Debian 12 Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU to an Elementary OS 8 Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU to a Fedora 39+ Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU on an Arch Linux Proxmox VE 8 Virtual Machine (VM)

- How to Passthrough an NVIDIA GPU on a Red Hat Enterprise Linux 9 (RHEL 9) Proxmox VE 8 Virtual Machine (VM)

Still Having Problems with PCI/PCIE Passthrough on Proxmox VE 8 Virtual Machines (VMs)?

If PCI/PCIE passthrough still doesn't work for you even after trying everything listed in this article correctly, be sure to try out some of the Proxmox VE PCI/PCIE passthrough tricks and/or workarounds that you can use to get PCI/PCIE passthrough to work on your hardware.

Conclusion

In this article, I've shown you how to configure your Proxmox VE 8 server for PCI/PCIE passthrough so that you can passthrough PCI/PCIE devices (e.g. your NVIDIA GPU) to your Proxmox VE 8 virtual machines (VMs). I've also shown you how to find out the kernel modules that you need to blacklist and how to blacklist them for a successful passthrough of your desired PCI/PCIE devices to a Proxmox VE 8 virtual machine. Finally, I've shown you how to configure your desired PCI/PCIE devices to use the VFIO kernel modules, which is also an essential step for a successful passthrough.