Beware of AI-generated ‘TikDocs’ exploiting public trust in healthcare to market dubious supplements

**Summary:** As generative AI technology becomes more accessible, it poses significant risks, especially in the realm of healthcare. This article explores how AI-generated deepfake avatars on platforms like TikTok and Instagram manipulate public trust in medical professionals to peddle sketchy supplements and misleading health claims. Understanding the tactics of these “TikDocs” can help you navigate online misinformation and avoid harmful scams.

Understanding the Threat of AI-Generated Misleading Content

Once confined to research labs, generative AI is now available to anyone, including those with malicious intent. Deepfake technology can create incredibly lifelike videos, images, and audio, and it is used not just for entertainment but also for identity theft and scams.

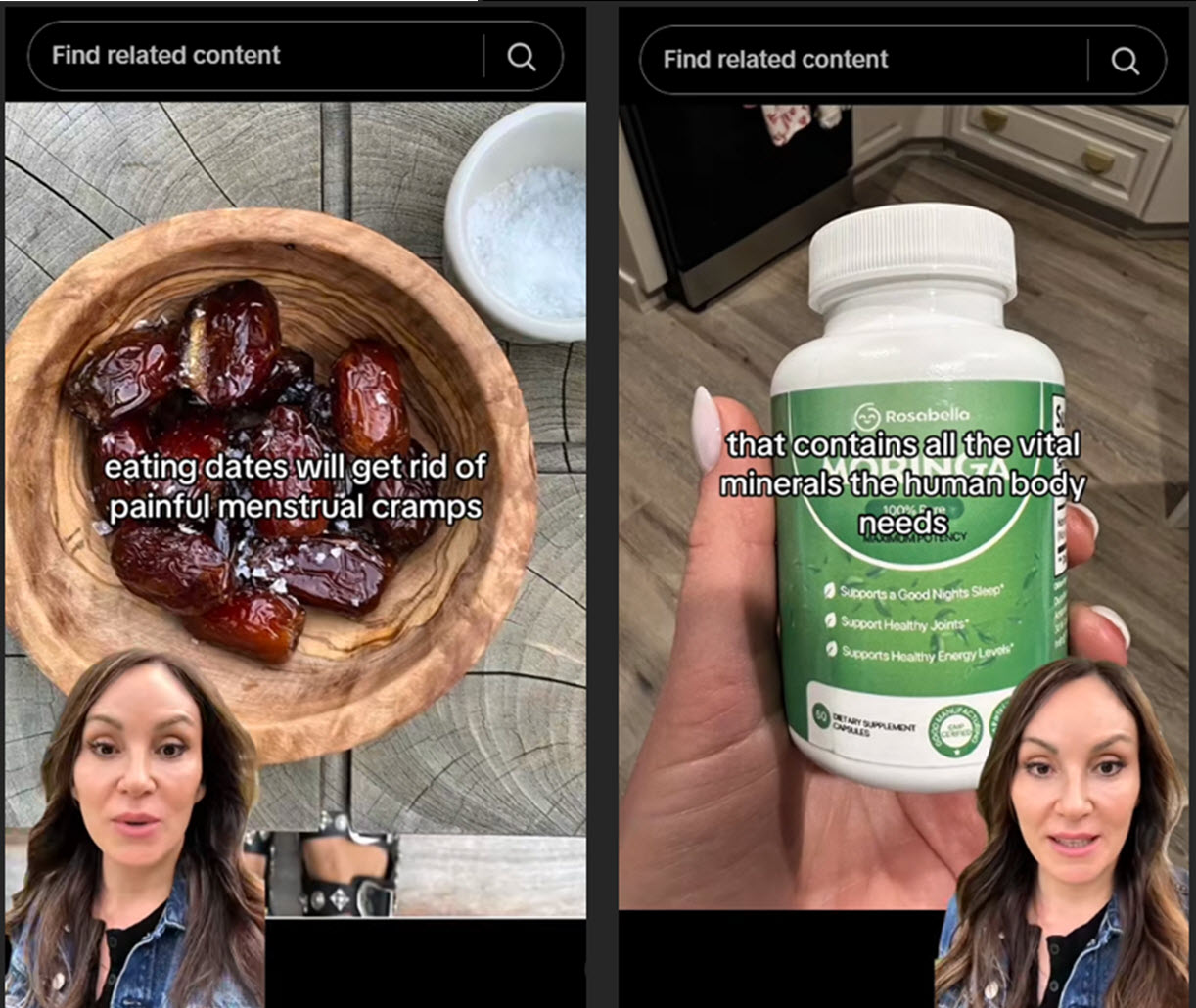

Recent ESET research uncovered a TikTok and Instagram campaign in which AI-generated avatars impersonated health professionals such as gynecologists and dietitians. These polished videos masquerade as expert medical advice and steer viewers toward risky purchases of dubious wellness products.

The Mechanics of Deception

Each video typically follows a script: a talking avatar offers “expert” health advice with a focus on “natural” remedies, steering viewers toward specific products. This exploitation of trust in the medical field is both unethical and alarmingly effective.

For example, one “doctor” promotes a “natural extract” as a miracle cure for weight loss, then directs viewers to an Amazon page selling unrelated products. Other videos hijack the likeness of reputable real doctors to push unapproved medications.

The Role of AI in Facilitating Misinformation

The videos are made with legitimate AI tools that turn simple footage into refined avatars. These tools can benefit influencers, but they also make deception easy: a marketing gimmick can quickly become a vehicle for falsehoods.

The harm extends beyond fraudulent supplements. These videos risk eroding public trust in genuine medical advice, which can lead people to delay necessary treatment.

Identifying AI-Generated Deception

With AI technology on the rise, distinguishing between authentic and fabricated content becomes increasingly challenging. Here are tips to identify deepfake videos:

- Mismatched lip movements or unnatural facial expressions.

- Visual glitches, like blurriness or sudden lighting changes.

- A robotic, overly polished voice that feels unnatural.

- Check the profile: a new account with few followers can be suspicious.

- Be wary of hyperbolic claims like “miracle cures” that lack credible sources.

- Always verify health claims with reputable medical resources and report suspicious content.

As AI tools continue to evolve, improving our digital literacy and developing technological safeguards become imperative to protect against misinformation that could jeopardize both health and finances.

FAQ

What are deepfakes?

Deepfakes use artificial intelligence to create hyper-realistic fake videos or audio, often impersonating real people. They’re widely used for entertainment but can also be misused for scams.

How can I identify a deepfake video?

Look for inconsistencies in lip-syncing, unnatural facial expressions, or any audio-visual glitches. Always verify the source of health information.

Why are deepfakes dangerous in healthcare?

Deepfakes can erode trust in authentic medical advice, leading people to make harmful health decisions based on misleading information.