GPFS (General Parallel File System) is a cluster file system developed by IBM. It is now called IBM Storage Scale and was previously known as IBM Spectrum Scale. It provides concurrent access to a single file system or a set of file systems from multiple nodes. The nodes can be SAN attached, network attached, or a mix of SAN attached and network attached. This enables high-performance access to this common set of data to support a scale-out solution or to provide a high-availability platform.

It has many features, including data replication, policy-based storage management and multi-site operations. It can share data within a location (LAN) or across wide area network (WAN) connections.

According to the IBM FAQ page, it supports a very large number of nodes (9500+), and it is used by many of the world's largest commercial companies as well as by some supercomputers.

A GPFS cluster can also be set up with a mix of platforms, such as Linux and AIX. It supports Linux (RHEL, Ubuntu and SUSE), AIX and Windows, but the number of supported nodes varies by operating system.

It supports 2048 disks in a file system, the maximum supported GPFS disk size is 2TB, and the maximum file system size is 2^99 bytes.

In this guide, we will show how to install the GPFS cluster file system 5.1.8 on a RHEL system.

- Part-1 How to Install and Configure GPFS Cluster on RHEL

- Part-2 How to Create GPFS Filesystem on RHEL

- Part-3 How to Extend a GPFS Filesystem on RHEL

- Part-4 How to Export a GPFS Filesystem in RHEL

- Part-5 How to Upgrade GPFS Cluster on RHEL

Our lab setup:

- Two Node GPFS cluster with RHEL 8.8

- Node1 – 2ggpfsnode01 – 192.168.10.50

- Node2 – 2ggpfsnode02 – 192.168.10.51

- IBM Storage Scale Standard Edition License

Prerequisites for GPFS

- A minimum of three nodes is required to set up a GPFS cluster with the default node quorum algorithm.

- If you want to run a small GPFS cluster (a two-node GPFS cluster), you need tiebreaker disks (up to three are supported, and this guide uses three); this configuration is called node quorum with tiebreaker disks.

- Two network interfaces are required: one for host communication and another for GPFS cluster intercommunication (private IP).

- Make sure the '/var' partition has at least '10GB' of space, as GPFS-related binaries and packages will be placed there.

- All SAN-attached disks, including the tiebreaker disks, should be mapped in shared mode to both nodes.

- Download the IBM Storage Scale package from Fix Central. This requires an active IBM account and a GPFS license.

- Check kernel compatibility before installing or upgrading a specific version of GPFS on a RHEL system. This information can be found on the IBM Storage Scale FAQ page.

1) Configuring /etc/hosts

Even though DNS name resolution is in place, it is recommended to configure the hosts file for faster name resolution (on both nodes).

cat /etc/hosts

#######Host IPs Configuration Start############

192.168.10.50 2ggpfsnode01.local 2ggpfsnode01

192.168.10.51 2ggpfsnode02.local 2ggpfsnode02

#######Host IPs Configuration END#############

Similarly, configure the hosts file for the private IPs (on both nodes).

cat /etc/hosts

#######GPFS Private IPs Start###########

192.168.20.100 2ggpfsnode01-gpfs

192.168.20.101 2ggpfsnode02-gpfs

#######GPFS Private IPs END#############
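If you script this step, appending an entry only when its IP is not already listed keeps the hosts file clean when the step is re-run on either node. A minimal sketch; `add_host_entry` is a hypothetical helper (not a GPFS tool), and it takes the hosts file path as an argument so it can be tried on a scratch copy before touching /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME [ALIASES...]" to a hosts file
# unless a line with the same IP as its first field already exists.
add_host_entry() {
    local hosts_file="$1" ip="$2"
    shift 2
    # awk exits 0 if the IP is found; a missing file counts as "not found".
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$hosts_file"
}

# Example for this lab (run as root on both nodes):
# add_host_entry /etc/hosts 192.168.10.50  2ggpfsnode01.local 2ggpfsnode01
# add_host_entry /etc/hosts 192.168.10.51  2ggpfsnode02.local 2ggpfsnode02
# add_host_entry /etc/hosts 192.168.20.100 2ggpfsnode01-gpfs
# add_host_entry /etc/hosts 192.168.20.101 2ggpfsnode02-gpfs
```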

2) Configuring the Private IP on a Network Interface

GPFS cluster communication between nodes goes over the private IP, so configure the private IP on a second network interface as shown below (on both nodes; use IPADDR=192.168.20.101 on 2ggpfsnode02).

vi /etc/sysconfig/network-scripts/ifcfg-ens256

NAME=ens256

DEVICE=ens256

ONBOOT=yes

USERCTL=no

BOOTPROTO=static

NETMASK=255.255.255.0

IPADDR=192.168.20.100

PEERDNS=no
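Because the file differs between the two nodes only in IPADDR (192.168.20.100 on 2ggpfsnode01 and 192.168.20.101 on 2ggpfsnode02), it can be generated from one template. A minimal sketch; `write_ifcfg` is a hypothetical helper, and the output directory is a parameter (defaulting to /etc/sysconfig/network-scripts) so it can be tested elsewhere.

```shell
# Hypothetical helper: write ifcfg-<dev> with the same keys used above.
# Usage: write_ifcfg <device> <ipaddr> [netmask] [dir]
write_ifcfg() {
    local dev="$1" ip="$2" mask="${3:-255.255.255.0}"
    local dir="${4:-/etc/sysconfig/network-scripts}"
    cat > "$dir/ifcfg-$dev" <<EOF
NAME=$dev
DEVICE=$dev
ONBOOT=yes
USERCTL=no
BOOTPROTO=static
NETMASK=$mask
IPADDR=$ip
PEERDNS=no
EOF
}

# write_ifcfg ens256 192.168.20.100   # on 2ggpfsnode01
# write_ifcfg ens256 192.168.20.101   # on 2ggpfsnode02
```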

2a) Restarting the Network Interface

Once the private IP is configured, restart the network interface using the commands below (on both nodes).

ifdown ens256

ifup ens256

2b) Checking the IP Output

Check that the private IP is configured on the second network interface (on both nodes).

ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens192: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:97:13:2e brd ff:ff:ff:ff:ff:ff

inet 192.168.10.50/24 brd 192.168.10.255 scope global dynamic noprefixroute ens192

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe97:132e/64 scope link

valid_lft forever preferred_lft forever

3: ens256: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:97:13:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.20.100/24 brd 192.168.20.255 scope global dynamic noprefixroute ens256

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe97:132e/64 scope link

valid_lft forever preferred_lft forever
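Instead of reading the full `ip a` listing, the one-line-per-address form (`ip -o -4 addr show dev ens256`) is easy to check from a script. A minimal sketch; `has_ipv4` is a hypothetical helper that reads that output on stdin and succeeds only if the expected address is present.

```shell
# Hypothetical helper: succeed if the "ip -o -4 addr" text on stdin
# contains the given IPv4 address (the /prefix length is ignored).
# In that output, field 3 is "inet" and field 4 is "address/prefix".
has_ipv4() {
    local want="$1"
    awk -v want="$want" '$3 == "inet" { split($4, a, "/"); if (a[1] == want) found = 1 }
                         END { exit !found }'
}

# On 2ggpfsnode01:
# ip -o -4 addr show dev ens256 | has_ipv4 192.168.20.100 && echo "private IP OK"
```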

2c) Setting up Password-less Authentication

In any cluster setup, password-less authentication between cluster nodes is required for various operations, so we will configure it here. In GPFS, each cluster node must be able to run ssh and scp commands on all other nodes as the root user to allow remote administration. If root does not have an SSH key pair yet, create one with ssh-keygen before running ssh-copy-id.

[root@2ggpfsnode01 ~]# ssh-copy-id root@2ggpfsnode01   #For self on Node-1

[root@2ggpfsnode01 ~]# ssh-copy-id root@2ggpfsnode02   #For the other node (from Node-1 to Node-2)

[root@2ggpfsnode02 ~]# ssh-copy-id root@2ggpfsnode01   #For the other node (from Node-2 to Node-1)

[root@2ggpfsnode02 ~]# ssh-copy-id root@2ggpfsnode02   #For self on Node-2

2d) Validating Password-less Authentication

Check that password-less authentication works as expected, both to the node itself and to the other node.

[root@2ggpfsnode01 ~]# ssh 2ggpfsnode01 date

Sat Nov 18 08:13:20 IST 2023

[root@2ggpfsnode01 ~]# ssh 2ggpfsnode02 date

Sat Nov 18 08:13:21 IST 2023

[root@2ggpfsnode02 ~]# ssh 2ggpfsnode01 date

Sat Nov 18 08:13:25 IST 2023

[root@2ggpfsnode02 ~]# ssh 2ggpfsnode02 date

Sat Nov 18 08:13:27 IST 2023
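When checking many nodes, it helps to make ssh fail instead of prompting for a password, so a missing key shows up as an error rather than a hung session. A minimal sketch; `check_passwordless` is a hypothetical helper, it relies on OpenSSH's `BatchMode=yes` option, and the `SSH_CMD` override exists only so the logic can be tested without real hosts.

```shell
# Hypothetical helper: verify non-interactive SSH to every given node.
# BatchMode=yes makes ssh fail instead of asking for a password.
# SSH_CMD is left unquoted on purpose so it can name a command or function.
check_passwordless() {
    local node rc=0
    for node in "$@"; do
        if ${SSH_CMD:-ssh} -o BatchMode=yes "$node" true >/dev/null 2>&1; then
            echo "OK: $node"
        else
            echo "FAIL: $node"
            rc=1
        fi
    done
    return "$rc"
}

# Run on each node:
# check_passwordless 2ggpfsnode01 2ggpfsnode02
```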

3) Installing GPFS RPMs

Now that the environment is ready, let's proceed with the GPFS installation. GPFS can be installed in two ways, manually or with the installation toolkit; in this article we will use the manual method.

Install the following prerequisite packages using the yum package manager.

# yum install kernel-devel cpp gcc gcc-c++ binutils elfutils-libelf-devel make kernel-headers nfs-utils ethtool python3 perl rpm-build
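By default, the portability layer build later in this article (mmbuildgpl) compiles against the running kernel, so the installed kernel-devel must match `uname -r` exactly; a plain `yum install kernel-devel` may pull a newer version if the node has not been rebooted after a kernel update. A minimal sketch; `kernel_devel_matches` is a hypothetical helper that reads installed kernel-devel versions on stdin.

```shell
# Hypothetical check: succeed if the running kernel version appears
# (as a whole line) in the list of installed kernel-devel versions on stdin.
kernel_devel_matches() {
    local running="$1"
    grep -qxF -- "$running"
}

# On a node; the rpm query format yields strings shaped like `uname -r`:
# rpm -q kernel-devel --queryformat '%{VERSION}-%{RELEASE}.%{ARCH}\n' \
#     | kernel_devel_matches "$(uname -r)" \
#     || echo "missing: yum install kernel-devel-$(uname -r)"
```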

Extract the archive file:

# tar -xf Scale_std_install_5.1.8.0_x86_64.tar.gz

Verify that the self-extracting program has executable permissions. If it does not, make it executable with the 'chmod +x' command.

# ls -lh

-rw-r--r-- 1 root root 2.0K Nov 18 07:00 README

-rw-r----- 1 root root 1.1G Nov 18 07:20 Scale_std_install_5.1.8.0_x86_64.tar.gz

-rw-r--r-- 1 root root 1.6K Nov 18 07:25 SpectrumScale_public_key.pgp

-rwxr-xr-x 1 root root 1.1G Nov 18 07:25 Spectrum_Scale_Standard-5.1.8.0-x86_64-Linux-install

When you run the command below, it first extracts the License Acceptance Process Tools to /usr/lpp/mmfs/5.1.8.0 and invokes them. This prompts for license acceptance: enter '1' and press 'Enter' to extract the required product RPMs to /usr/lpp/mmfs/5.1.8.0. The installer checks whether this directory was created by a previous extraction and, if so, removes it to avoid conflicts with the files being extracted. It also removes the License Acceptance Process Tools once the product RPMs are extracted.

It also prints instructions for installing and configuring IBM Storage Scale with the installation toolkit method.

# sh Spectrum_Scale_Standard-5.1.8.0-x86_64-Linux-install

Change to the directory containing the RPM files.

# cd /usr/lpp/mmfs/5.1.8.0/gpfs_rpms

Now install the GPFS RPMs.

# rpm -ivh gpfs.base*.rpm gpfs.gpl*rpm gpfs.license.std*.rpm gpfs.gskit*rpm gpfs.msg*rpm gpfs.docs*rpm

3a) Exporting the PATH Environment Variable

Export the PATH environment variable for the root user on each node as shown below. This allows you to run IBM Storage Scale commands without changing to the '/usr/lpp/mmfs/bin' directory.

# echo 'export PATH=$PATH:$HOME/bin:/usr/lpp/mmfs/bin' >> /root/.bash_profile

# source /root/.bash_profile
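Appending with `>>` only when the line is not already present keeps the rest of the profile intact and avoids duplicate entries if the step is repeated. A minimal sketch; `add_gpfs_path` is a hypothetical helper. Single quotes keep `$PATH` and `$HOME` unexpanded in the file, so they are evaluated at login time.

```shell
# Hypothetical helper: add the GPFS bin directory to PATH in a profile
# file exactly once (defaults to /root/.bash_profile).
add_gpfs_path() {
    local profile="${1:-/root/.bash_profile}"
    local line='export PATH=$PATH:$HOME/bin:/usr/lpp/mmfs/bin'
    grep -qxF -- "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}

# add_gpfs_path && source /root/.bash_profile
```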

3b) Building the GPFS Portability Layer

Run the command below to build the GPFS portability layer. The GPFS portability layer is a loadable kernel module that allows the GPFS daemon to interact with the operating system.

This command must be run every time a new kernel is installed. It can be automated with the 'autoBuildGPL' option, which will be covered in this article or the next one.

# mmbuildgpl
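Since the portability layer has to be rebuilt after every kernel change, a quick check after a reboot can tell whether modules exist for the running kernel. A minimal sketch, ASSUMING mmbuildgpl installs its modules as mmfs26.ko, mmfslinux.ko and tracedev.ko under /lib/modules/<kernel>/extra; verify the names and location on your own system before relying on it. `gpl_modules_present` is a hypothetical helper.

```shell
# Hypothetical check. ASSUMPTION: module names and directory below match
# what mmbuildgpl installs on your release. Prints each missing module
# and returns 1 if any is missing.
gpl_modules_present() {
    local moddir="${1:-/lib/modules/$(uname -r)/extra}" mod rc=0
    for mod in mmfs26.ko mmfslinux.ko tracedev.ko; do
        if [ ! -f "$moddir/$mod" ]; then
            echo "missing: $moddir/$mod"
            rc=1
        fi
    done
    return "$rc"
}

# After a kernel update and reboot:
# gpl_modules_present || mmbuildgpl
```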

3c) Creating the GPFS Cluster

This is a very small setup (a two-node cluster), so we will add both nodes with the 'manager' and 'quorum' designations. To do so, add the GPFS hostnames to a text file as shown below.

Syntax:

NodeName:NodeDesignations:AdminNodeName:NodeComment

In the following file, we will only add the 'NodeName' and 'NodeDesignations' fields; the remaining parameters can be supplied as part of the GPFS cluster creation command.

# cd /usr/lpp/mmfs

# printf '%s\n' "2ggpfsnode01-gpfs:manager-quorum" "2ggpfsnode02-gpfs:manager-quorum" > nodefile.txt
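For larger clusters, the node file can be generated from a list of node names so that every node gets one "NodeName:NodeDesignations" line. A minimal sketch; `make_nodefile` is a hypothetical helper and writes only the two fields used in this article.

```shell
# Hypothetical helper: write one "NodeName:NodeDesignations" line per node.
# Usage: make_nodefile <output-file> <designation> <node>...
make_nodefile() {
    local out="$1" designation="$2" node
    shift 2
    for node in "$@"; do
        printf '%s:%s\n' "$node" "$designation"
    done > "$out"
}

# make_nodefile /usr/lpp/mmfs/nodefile.txt manager-quorum 2ggpfsnode01-gpfs 2ggpfsnode02-gpfs
```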

Everything is now ready. Finally, run the mmcrcluster command to create the cluster.

# mmcrcluster -N /usr/lpp/mmfs/nodefile.txt --ccr-enable -r /usr/bin/ssh -R /usr/bin/scp -C 2gtest-cluster -A

mmcrcluster: Performing preliminary node verification ...

mmcrcluster: Processing quorum and other critical nodes ...

mmcrcluster: Finalizing the cluster data structures ...

mmcrcluster: Command successfully completed

mmcrcluster: Warning: Not all nodes have proper GPFS license designations.

Use the mmchlicense command to designate licenses as needed.

mmcrcluster: [I] The cluster was created with the tscCmdAllowRemoteConnections configuration parameter set to "no". If a remote cluster is established with another cluster whose release level (minReleaseLevel) is less than 5.1.3.0, change the value of tscCmdAllowRemoteConnections in this cluster to "yes".

mmcrcluster: Propagating the cluster configuration data to all

affected nodes. This is an asynchronous process.

Details:

- /usr/lpp/mmfs/nodefile.txt – This file contains the node information.

- --ccr-enable – Enables the Cluster Configuration Repository (CCR) to maintain cluster configuration information.

- -r – RemoteShellCommand (/usr/bin/ssh)

- -R – RemoteFileCopy (/usr/bin/scp)

- -C – ClusterName (2gtest-cluster)

- -N – A node name and its node designations, or the path of a file that contains node and designation information.

- -A – Specifies that GPFS daemons are to be started automatically when nodes come up.
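If you reuse this step for other clusters, building the command from variables and previewing it before running avoids typos in the node file path or cluster name. A minimal sketch; `create_cluster` and the `DRY_RUN` variable are hypothetical, and only the mmcrcluster options already shown in this article are used.

```shell
# Hypothetical wrapper: print the mmcrcluster command and run it only
# when DRY_RUN=0 (the default is a dry run).
create_cluster() {
    local nodefile="$1" name="$2"
    local -a cmd=(mmcrcluster -N "$nodefile" --ccr-enable
                  -r /usr/bin/ssh -R /usr/bin/scp -C "$name" -A)
    printf '%s\n' "${cmd[*]}"
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "${cmd[@]}"
    fi
}

# Preview, then run for real:
# create_cluster /usr/lpp/mmfs/nodefile.txt 2gtest-cluster
# DRY_RUN=0 create_cluster /usr/lpp/mmfs/nodefile.txt 2gtest-cluster
```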

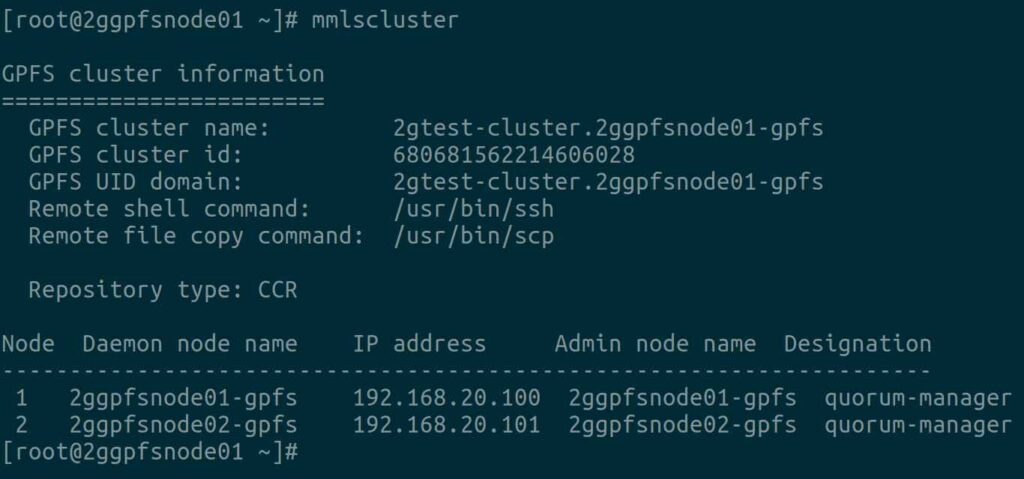

To verify the cluster information created in the steps above, run:

# mmlscluster

3d) Creating Tiebreaker Disks

As discussed at the beginning of the article, when running a small GPFS cluster you might want the cluster to remain online with only one surviving node. To achieve this, you need to add tiebreaker disks to the quorum configuration. Node quorum with tiebreaker disks allows you to run with as few as one quorum node available, as long as you have access to a majority of the quorum disks.

The tiebreaker disks will not hold any data, so request '3x1 GB' disks from the SAN (Storage Area Network), mapped in shared mode to both nodes, and follow the steps below.

Get the LUN IDs from the storage team and scan the SCSI disks (on both nodes).

# for host in `ls /sys/class/scsi_host`; do echo "Scanning $host...Completed"; echo "- - -" > /sys/class/scsi_host/$host/scan; done
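The same rescan can be written with a glob instead of parsing `ls`, which is the more robust idiom in shell scripts. A minimal sketch; `rescan_scsi_hosts` is a hypothetical helper, and its sysfs base directory is a parameter (defaulting to /sys/class/scsi_host) only so it can be exercised against a scratch directory.

```shell
# Hypothetical helper: trigger a SCSI rescan on every host adapter.
# Writing "- - -" to .../scan means wildcard channel, target and LUN.
rescan_scsi_hosts() {
    local base="${1:-/sys/class/scsi_host}" host
    for host in "$base"/host*; do
        # Skip the unexpanded pattern and entries without a scan file.
        [ -e "$host/scan" ] || continue
        echo "Scanning ${host##*/}..."
        echo "- - -" > "$host/scan"
    done
}

# On each node, as root:
# rescan_scsi_hosts
```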

After the scan, check whether the given LUNs are visible at the OS level.

# lsscsi --scsi --size | grep -i [Last_Five_Digit_of_LUN]

Create an 'NSD' file for the tiebreaker disks as shown below. Make sure you are using the correct block devices.

# printf '%s\n' "/dev/sde:::::tiebreak1" "/dev/sdf:::::tiebreak2" "/dev/sdg:::::tiebreak3" > /usr/lpp/mmfs/tiebreaker_Disks.nsd
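Since a wrong device name here can point GPFS at the wrong LUN, a small generator can write the same "Device:::::NsdName" lines as the NSD file shown above and warn about anything that is not a block device on the current node. A minimal sketch; `make_tiebreaker_nsd` and its "device=name" argument style are hypothetical.

```shell
# Hypothetical helper: write one "Device:::::NsdName" line per argument,
# where each argument has the form /dev/xxx=nsdname. Warnings go to stderr.
make_tiebreaker_nsd() {
    local out="$1" pair dev name
    shift
    for pair in "$@"; do
        dev="${pair%%=*}"
        name="${pair#*=}"
        [ -b "$dev" ] || echo "warning: $dev is not a block device on this node" >&2
        printf '%s:::::%s\n' "$dev" "$name"
    done > "$out"
}

# make_tiebreaker_nsd /usr/lpp/mmfs/tiebreaker_Disks.nsd \
#     /dev/sde=tiebreak1 /dev/sdf=tiebreak2 /dev/sdg=tiebreak3
```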

Create the Network Shared Disks (NSDs) used by GPFS with the mmcrnsd command as shown below:

# mmcrnsd -F /usr/lpp/mmfs/tiebreaker_Disks.nsd

mmcrnsd: Processing disk sde

mmcrnsd: Processing disk sdf

mmcrnsd: Processing disk sdg

mmcrnsd: Propagating the cluster configuration data to all affected nodes. This is an asynchronous process.

If the mmcrnsd command runs successfully, it adds a new line for each disk and comments out the original user input, as shown below.

# cat /usr/lpp/mmfs/tiebreaker_Disks.nsd

# /dev/sde:::::tiebreak1

tiebreak1:::dataAndMetadata:-1::system

# /dev/sdf:::::tiebreak2

tiebreak2:::dataAndMetadata:-1::system

# /dev/sdg:::::tiebreak3

tiebreak3:::dataAndMetadata:-1::system
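The names passed to tiebreakerDisks must match the NSD names that mmcrnsd created, and those names are the first field of the uncommented lines in the rewritten file. A minimal sketch; `tiebreaker_list` is a hypothetical helper that joins those names with ";" so the value can be built from the file instead of being typed by hand.

```shell
# Hypothetical helper: print the NSD names from a file rewritten by
# mmcrnsd, joined with ";" (comment and blank lines are skipped).
tiebreaker_list() {
    awk -F: '!/^[[:space:]]*#/ && NF > 1 { names = names (names ? ";" : "") $1 }
             END { print names }' "$1"
}

# mmchconfig tiebreakerDisks="$(tiebreaker_list /usr/lpp/mmfs/tiebreaker_Disks.nsd)"
```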

Run the mmchconfig command to add the tiebreaker disk information to the GPFS cluster configuration.

# mmchconfig tiebreakerDisks="tiebreak1;tiebreak2;tiebreak3"

mmchconfig: Command successfully completed

mmchconfig: Propagating the cluster configuration data to all affected nodes. This is an asynchronous process.

3e) Starting the Cluster

The GPFS cluster can be started with the mmstartup command. Use the '-a' switch to start GPFS on all nodes simultaneously.

# mmstartup -a

Thu Nov 18 10:45:22 +04 2023: mmstartup: Starting GPFS ...

The mmgetstate command displays the state of the GPFS daemon on one or more nodes. The nodes have not yet joined and formed the cluster, which is why the state shows as 'arbitrating'.

# mmgetstate -aL

Node number  Node name  Quorum  Nodes up  Total nodes  GPFS state  Remarks

-----------------------------------------------------------------------------------------

1 2ggpfsnode01-gpfs arbitrating quorum node

2 2ggpfsnode02-gpfs arbitrating quorum node

The nodes have now joined successfully, and the GPFS state shows as 'active':

# mmgetstate -aL

Node number  Node name  Quorum  Nodes up  Total nodes  GPFS state  Remarks

-----------------------------------------------------------------------------------------

1 2ggpfsnode01-gpfs 1* 2 2 active quorum node

2 2ggpfsnode02-gpfs 1* 2 2 active quorum node
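Because the nodes pass through 'arbitrating' before becoming 'active', a script that continues after mmstartup should wait for the expected number of active nodes. A minimal sketch; `count_active` and `wait_for_active` are hypothetical helpers, the 10-second poll interval and 300-second default timeout are arbitrary choices, and the parser simply counts lines containing the whole word "active".

```shell
# Hypothetical helper: count nodes reported as "active" in mmgetstate
# output on stdin. -w keeps "inactive" from matching; "|| true" keeps a
# zero count from returning a failure status.
count_active() {
    grep -cw active || true
}

# Hypothetical helper: poll until <expected> nodes are active or time out.
wait_for_active() {
    local expected="$1" timeout="${2:-300}" waited=0 n
    while :; do
        n="$(mmgetstate -a | count_active)"
        [ "$n" -ge "$expected" ] && return 0
        if [ "$waited" -ge "$timeout" ]; then
            echo "only $n of $expected nodes active" >&2
            return 1
        fi
        sleep 10
        waited=$((waited + 10))
    done
}

# mmstartup -a && wait_for_active 2
```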

3f) Accepting the License

Use the mmchlicense command to designate licenses as needed. It also controls the type of GPFS license associated with the nodes in the cluster.

# mmchlicense server --accept -N 2ggpfsnode01-gpfs,2ggpfsnode02-gpfs

The following nodes will be designated as possessing server licenses:

2ggpfsnode01-gpfs

2ggpfsnode02-gpfs

mmchlicense: Command successfully completed

mmchlicense: Propagating the cluster configuration data to all affected nodes. This is an asynchronous process.

Final Thoughts

I hope you learned how to install and configure a GPFS cluster file system on a RHEL system.

In this article, we covered GPFS cluster installation and configuration, including cluster creation, NSD creation and adding tiebreaker disks.

If you have any questions or feedback, feel free to comment below.